by Philippe Valoggia (Luxembourg Institute of Science and Technology)

Ethical AI/AS are systems that do not infringe human rights. We might fail to develop such systems due to a lack of common understanding of what quality requirements to meet (i.e., the rights and freedoms of users), and because actors involved in the systems’ development do not collaborate in a consistent way. We propose a risk-based engineering approach to overcome these two engineering pitfalls.

Advanced digital technologies such as Artificial Intelligence and Autonomous Systems (AI/AS) open up promising opportunities to tackle economic, societal, and environmental challenges. But their use also gives rise to certain concerns related to security, privacy, and ethics. To prevent privacy issues, the EU General Data Protection Regulation (GDPR) [L1] introduced the obligation to engineer personal data-based products and services that deliberately protect the rights and freedoms of individuals (Data Protection by Design and by Default, Art. 25).

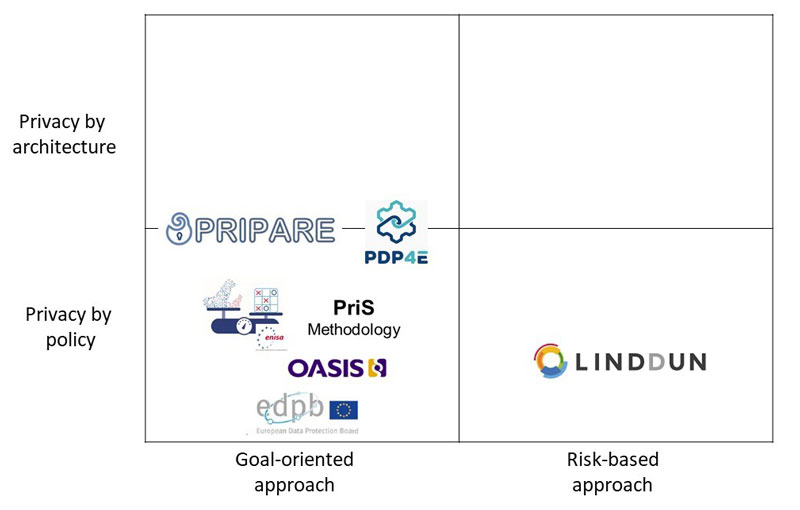

Protection of human rights is a critical quality requirement of any system or product handling personal data. Field observations and literature reviews cast doubt on the ability of current privacy engineering approaches to properly support the development of privacy-preserving products and systems. The privacy engineering literature usually adopts a goal-oriented approach to specify the privacy quality requirements of a system (see Figure 1).

Figure 1: Main privacy-by-design methodologies organised along their approach and their focus.

In these approaches, protection of rights and freedoms is achieved through the completion of privacy-protection goals. Confidentiality, security, integrity, unlinkability, transparency, autonomy, etc. have become the usual privacy quality requirements of systems handling personal data. The practicability of these goals is sometimes questionable, but more importantly, the measurement of their achievement is still not well established, and their respective contribution to the protection of human rights is either uncertain or not specified.

Data protection through technology development is usually presented as a multidisciplinary challenge: it requires combining different fields of knowledge to properly meet privacy quality requirements. By breaking down the protection of human rights into several goals, these approaches implicitly introduce a division of work: experts involved in the design process are likely to specialise in achieving only one or two goals. Experts then operate in silos, and their attention is drawn to satisfying one privacy quality requirement rather than to protecting people.

The first works related to ethics and AI seem to favour a goal-oriented engineering approach. Indeed, various AI principles have been proposed to specify the quality requirements of trustworthy AI-based systems. Disciplinary communities specialising in the fulfilment of one of these principles, such as robustness or explainability, have already emerged. Ethical engineering is therefore likely to face the same engineering pitfalls as privacy engineering.

Neither privacy engineering nor ethical engineering is doomed to fail to properly protect human rights. We propose adopting a risk-based approach to overcome the two pitfalls of goal-oriented engineering described above. Specifying quality requirements then consists of identifying the risks that advanced digital systems pose to human rights. From a methodological point of view, a risk-based approach is the key means of specifying and measuring critical requirements that cannot be directly quantified, such as the protection of the rights and freedoms of natural persons.

A risk-based approach to specifying and measuring quality requirements is consistent with the EU data protection legal framework, which is presented as a risk-based regulation. The GDPR introduces the obligation to measure the impact of a system on the protection of personal data (Data Protection Impact Assessment, DPIA, Art. 35). The DPIA is the only indicator of the protection of human rights that is recognised by law. It is therefore logical to use it to measure the satisfaction of privacy quality requirements when developing systems handling personal data.

The first proposal for the EU’s Artificial Intelligence Act seems to adopt a risk-based approach too. Article 9 states the obligation to implement risk management systems for AI-based systems. In addition to its consistency with the regulatory framework, a risk-based approach to engineering is likely to minimise the disciplinary silo effect mentioned above. Indeed, considered as a network object [1], risk helps to smooth out disciplinary borders by drawing attention to a common purpose.

Although a risk-based approach to engineering appears to be a promising way to tackle the challenges of both privacy and ethical engineering, further investigation is still required to make it happen. First, it is necessary to define a risk model that specifies risk factors and their interrelations. Second, the assumption that risk is the appropriate network object to ease effective multidisciplinary work when developing systems based on advanced technologies has to be verified. Having designed a privacy risk management assessment tool based on seminal works conducted by Perry [2], we plan to test its reliability and its impact on multidisciplinary work across different privacy and ethical engineering projects, as suggested by the design science methodological approach.
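To make the idea of a risk model more concrete, the following is a minimal, purely illustrative sketch and not the assessment tool mentioned above. It assumes two risk factors (likelihood and severity), a three-level scale, a multiplicative interrelation between the factors, and an acceptance threshold; all names (`Level`, `Risk`, `unmet_requirements`) and values are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Assumed three-level ordinal scale for both risk factors."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Risk:
    """One risk that a system poses to a right or freedom (hypothetical model)."""
    right: str         # the right or freedom at stake
    threat: str        # the feared event
    likelihood: Level  # risk factor 1
    severity: Level    # risk factor 2

    @property
    def score(self) -> int:
        # Assumed interrelation: the two factors combine multiplicatively.
        return int(self.likelihood) * int(self.severity)


def unmet_requirements(risks, threshold=4):
    """Return the risks whose score exceeds the threshold, worst first."""
    too_high = [r for r in risks if r.score > threshold]
    return sorted(too_high, key=lambda r: r.score, reverse=True)


# Illustrative register shared by all experts on a project (invented entries).
register = [
    Risk("non-discrimination", "biased scoring of applicants", Level.MEDIUM, Level.HIGH),
    Risk("privacy", "re-identification from logs", Level.LOW, Level.HIGH),
    Risk("freedom of expression", "over-blocking of lawful content", Level.HIGH, Level.HIGH),
]

for risk in unmet_requirements(register):
    print(f"{risk.right}: {risk.threat} (score {risk.score})")
```

In this sketch the quality requirement is expressed as "no risk above the threshold", so every discipline works against the same register rather than against a separate goal. A real risk model would need validated factors and scales.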

Links:

[L1] https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52021PC0206

[L2] https://www.list.lu/en/research/

References:

[1] B. Latour, “Reassembling the Social: An Introduction to Actor-Network-Theory”, Oxford University Press, 2005.

[2] S. Perry, “The Future of Privacy: Private Life and Public Policy”, Demos, 1998.

Please contact:

Philippe Valoggia

Luxembourg Institute of Science and Technology, Luxembourg