by Sara Ferreira (University of Porto), Mário Antunes (Polytechnic of Leiria) and Manuel E. Correia (University of Porto)

Tampered multimedia content is increasingly being used in a broad range of cybercrime activities. Fake news, misinformation, digital kidnapping and ransomware-related crimes are among the most common cases in which manipulated digital photos serve as an attack vector. A linchpin of accurately detecting manipulated multimedia content is the use of machine learning and deep learning algorithms. This work proposes a dataset of photos and videos suitable for digital forensics, which has been used to benchmark Support Vector Machine (SVM) and Convolutional Neural Network (CNN) algorithms. An SVM-based module for Autopsy, the open-source digital forensics application, has also been developed. It was judged a capable and useful forensic tool, winning second place in the international OSDFCon Autopsy module development contest.

Cybercrime is challenging national security systems all over the world, with malicious actors exploiting human and technical vulnerabilities. The global reach of cyberattacks, their sophistication and their impact on society have been amplified by the pandemic we are all currently enduring. This has raised global awareness of how dependent we now are on the Internet to carry out normal daily activities, and how vulnerable we all are to fraud and other criminal activities in cyberspace.

Deepfakes use artificial intelligence to replace the likeness of one person with that of another in video and other digital media. They can inflict severe reputational and other damage on their victims. Coupled with the reach and speed of social media, convincing deepfakes can quickly reach millions of people, harming society at large. Deepfake attacks can have different motivations, such as fake news, revenge porn and digital kidnapping; they usually involve underage or otherwise vulnerable victims and may be associated with ransomware-style blackmail. When conducted manually and solely by a human operator, the digital forensic analysis of such cases can be very time-consuming and inefficient at identifying and collecting complete and meaningful digital evidence of cybercrimes, often because files are misclassified.

Effective forensic tools are essential, as they can reconstruct the evidence left by cybercriminals when they perpetrate a cyberattack. However, an increasing number of highly sophisticated tools make it much easier for cybercriminals to carry out complex digital attacks, and criminal investigators face a difficult challenge in keeping up with these operational advantages. Autopsy [L1] is a digital forensics tool that helps to level the playing field. It is open source and widely used by criminal investigators to analyse and identify digital evidence of suspicious activities. Autopsy includes a wide range of native modules to process digital objects, including raw disk images, and allows the community to develop further modules for more specialised forensic tasks.

Machine learning (ML) has boosted the automated detection and classification of digital artifacts in forensic investigation tools. However, well-proven ML techniques for detecting manipulated photos and videos, including deepfakes, are seldom fully integrated into the most popular digital forensics applications, so they have not yet translated into substantial gains for cybercrime investigation. ML-based Autopsy modules capable of detecting deepfakes are therefore of direct practical value to investigative authorities [1]. In this work [1, 2, 3], we made the following contributions:

- A labelled and balanced dataset of about 52,000 genuine and manipulated photos and videos, covering the most common manipulation techniques, namely splicing, copy-move and deepfakes [3].

- An SVM-based model capable of processing multimedia files and detecting those that have been digitally manipulated. The model uses a set of simple features extracted by applying the Discrete Fourier Transform (DFT) to the input file.

- Two ready-to-use Autopsy modules that estimate the fakeness level of digital photos and of video files, respectively [L2, L3].
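The DFT-based features mentioned above can be illustrated with a short sketch. One common way to turn a 2-D DFT into a compact feature vector, and an assumption here rather than the authors' exact recipe, is to take the log-magnitude spectrum, average it over rings of equal radius (an azimuthal average) and resample the resulting profile to a fixed length. The function name `dft_azimuthal_features` and the default of 50 bins are hypothetical; the code uses only NumPy.

```python
import numpy as np


def dft_azimuthal_features(gray: np.ndarray, n_bins: int = 50) -> np.ndarray:
    """Hypothetical helper: 1-D spectral profile of a grayscale image.

    2-D DFT -> zero frequency shifted to the centre -> scaled log magnitude ->
    mean over rings of equal radius -> resampled to `n_bins` values in [0, 1].
    """
    img = np.asarray(gray, dtype=np.float64)
    spectrum = np.fft.fftshift(np.fft.fft2(img))
    magnitude = 20.0 * np.log(np.abs(spectrum) + 1e-8)  # epsilon avoids log(0)

    # Integer distance of every frequency bin from the centre of the spectrum.
    h, w = magnitude.shape
    yy, xx = np.indices((h, w))
    radius = np.hypot(yy - h // 2, xx - w // 2).astype(int).ravel()

    # Azimuthal average: mean log magnitude for each integer radius.
    sums = np.bincount(radius, weights=magnitude.ravel())
    counts = np.bincount(radius)
    profile = sums / np.maximum(counts, 1)

    # Resample to a fixed length so images of any size give comparable vectors.
    positions = np.linspace(0, len(profile) - 1, n_bins)
    profile = np.interp(positions, np.arange(len(profile)), profile)

    # Min-max normalisation to [0, 1].
    span = profile.max() - profile.min()
    return (profile - profile.min()) / span if span > 0 else np.zeros(n_bins)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = dft_azimuthal_features(rng.random((128, 128)))
    print(features.shape, features.min(), features.max())
```

Because every image, whatever its size, is reduced to the same number of values, the vectors can be stacked into a feature matrix for a classifier such as an SVM.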

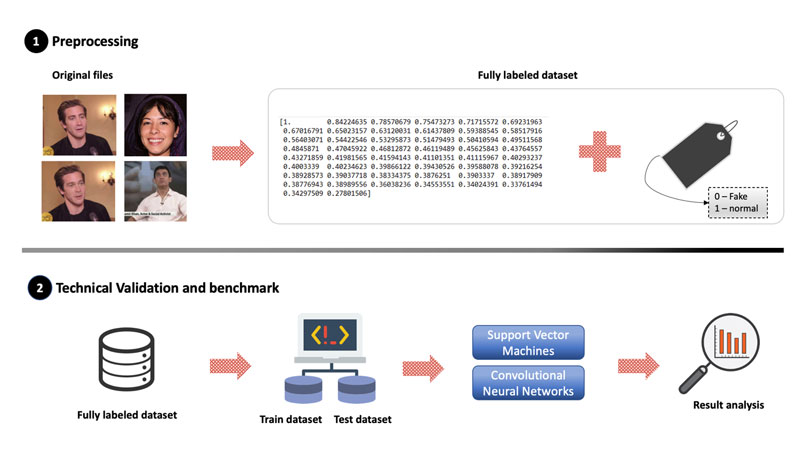

The overall architecture is shown in Figure 1. It is composed of two main stages: pre-processing, and technical validation and benchmark of the dataset [2].

Figure 1: Overall architecture of the preprocessing and technical validation of the dataset.

Pre-processing consists of reading the photos and extracting up to four frames per second from the input videos using the OpenCV library. Once all photos and frames are available, features are extracted by applying the DFT, producing labelled input datasets for training and testing. The processing phase then runs the SVM and CNN classifiers. The SVM was implemented with the scikit-learn library for Python 3.9, and the model it produces is used to compute a “fake” score for each photo in the test set. Tests were carried out with 5-fold cross-validation: the dataset is split into five equal parts, and each part in turn is used for testing while the other four are used for training. These two phases were incorporated into a standalone application, which was then integrated into Autopsy as two separate modules [L4].
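Two steps of this pipeline, frame sampling and 5-fold cross-validation, can be sketched in Python with NumPy and scikit-learn. The sketch is illustrative and makes several assumptions not stated in the article: the helper names `frames_to_keep` and `five_fold_fake_scores` are hypothetical, the folds are stratified and shuffled (`StratifiedKFold`), the SVM uses an RBF kernel, and the "fake" score is taken to be the SVM's signed distance to the decision boundary (`decision_function`), with label 1 meaning manipulated. In a real pipeline the frame rate and frame count would come from OpenCV (`cv2.VideoCapture` with `cv2.CAP_PROP_FPS` and `cv2.CAP_PROP_FRAME_COUNT`); here they are plain numbers, and the demo uses synthetic feature vectors rather than the dataset.

```python
import math

import numpy as np
from sklearn.model_selection import StratifiedKFold
from sklearn.svm import SVC


def frames_to_keep(native_fps: float, n_frames: int, max_fps: float = 4.0) -> list[int]:
    """Hypothetical helper: indices of frames to sample so that at most
    `max_fps` frames per second of video are kept."""
    step = max(native_fps / max_fps, 1.0)  # keep every frame if the video is slow enough
    return [int(i * step) for i in range(math.ceil(n_frames / step))]


def five_fold_fake_scores(X: np.ndarray, y: np.ndarray, seed: int = 0) -> tuple[np.ndarray, list[float]]:
    """Hypothetical helper: 5-fold cross-validation of an SVM.

    Each fold is used once for testing while the other four train the model.
    Returns one "fake" score per sample (signed distance to the decision
    boundary; positive leans towards label 1 = manipulated) and the accuracy
    of each fold.
    """
    scores = np.empty(len(y), dtype=float)
    accuracies = []
    folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    for train_idx, test_idx in folds.split(X, y):
        model = SVC(kernel="rbf", gamma="scale")
        model.fit(X[train_idx], y[train_idx])
        scores[test_idx] = model.decision_function(X[test_idx])
        accuracies.append(model.score(X[test_idx], y[test_idx]))
    return scores, accuracies


if __name__ == "__main__":
    # A 30 fps clip of 60 frames (2 s) yields 4 sampled frames per second.
    print(frames_to_keep(30.0, 60))

    # Synthetic, well-separated feature vectors standing in for DFT features.
    rng = np.random.default_rng(0)
    X = np.vstack([rng.normal(0.0, 1.0, (100, 50)), rng.normal(1.0, 1.0, (100, 50))])
    y = np.array([0] * 100 + [1] * 100)
    scores, accuracies = five_fold_fake_scores(X, y)
    print(f"mean accuracy over 5 folds: {np.mean(accuracies):.3f}")
```

Shuffling before splitting matters in the demo because the synthetic labels are ordered by class; stratification keeps each fold balanced between genuine and manipulated samples.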

The deliverables of this research, namely the ready-to-use Autopsy modules, can assist digital forensics investigators and promote the use of ML techniques against cybercrime involving multimedia files. The low processing time and high detection performance of the DFT-SVM method make it a suitable candidate for a plugin that could be used easily, and in real time, to assess the fakeness level of multimedia content shared on social networks.

Links:

[L1] https://www.autopsy.com/

[L2] https://github.com/saraferreirascf/Photos-Videos-Manipulations-Dataset

[L3] https://github.com/saraferreirascf/Photo-and-video-manipulations-detector

[L4] https://www.osdfcon.org/2021-event/2021-module-development-contest/

References:

[1] S. Ferreira, M. Antunes, M.E. Correia: “Forensic Analysis of Tampered Digital Photos”, in: Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2021, Springer LNCS 2021, vol. 12702, pp. 461-470. https://doi.org/10.1007/978-3-030-93420-0_43

[2] S. Ferreira, M. Antunes, M.E. Correia: “Exposing Manipulated Photos and Videos in Digital Forensics Analysis”, Journal of Imaging, 2021; 7(7):102. https://doi.org/10.3390/jimaging7070102

[3] S. Ferreira, M. Antunes, M.E. Correia: “A Dataset of Photos and Videos for Digital Forensics Analysis Using Machine Learning Processing”, Data, 2021; 6(8):87. https://doi.org/10.3390/data6080087

Please contact:

Manuel E. Correia

University of Porto, Portugal