by Barbara Leporini (ISTI-CNR) and Maria Teresa Paratore (ISTI-CNR)

People with special needs face specific challenges in everyday activities. People with visual impairments, for example, have problems with orientation and mobility; moreover, they face serious issues when it comes to accessing information and services or perceiving the surrounding environment. Technologies such as the Internet of Things (IoT) and artificial systems can offer interesting solutions to overcome these limitations and can support users with special needs in an inclusive way.

With human communication and provision of services becoming increasingly reliant on computers and smartphones, it is important to consider different users’ requirements in order to build an inclusive society. Concepts such as confidence in, and awareness of, artificial devices and digital content need to be addressed and personalised according to user characteristics and preferences. Confidence is essential, since it allows humans to tackle both known and unfamiliar tasks with hope, optimism, and resilience. Awareness enables confidence, because the more we know about the task we have to perform and about the agent with which we are interacting, the more confident we feel; this is even more true for people with special needs (e.g. visual impairments).

As an example, consider an app conceived to guide users through a complex building such as an airport; it will provide information about the available services (e.g., ATMs, check-in points, toilets), their locations, opening hours, and how they can be reached. Many projects have developed apps for this purpose, but few have considered incorporating tactile information to assist people with visual impairments. TIGHT (Tactile InteGration between Humans and arTificial systems) is a project whose main goal is to help humans achieve awareness of their environment via novel tactile communication paradigms [L1].

The tactile channel is still under-exploited as a means to provide hints in assistive and mainstream applications; in the context of the project, the tactile sense is considered an effective way of exchanging information to help users perceive their environment. Useful information for orientating, moving, and accessing specific content can be conveyed via wearable haptic devices, which let the user perceive touch cues through the body [1]. TIGHT will use knowledge from the recent field of wearable haptics to tackle the technological and neuroscientific challenges of developing wearable haptic interfaces. Applications of such interfaces include orientation, guidance and information communication for people with visual impairments. Haptic interfaces can be used in domains such as domotics, education, leisure, navigation and mobility. Indoor navigation applications have been widely adopted to assist people with visual impairments, since they effectively increase social inclusion and autonomy [2]. The continual evolution of technologies related to sensors and mobile devices has increased the popularity of mobile applications for indoor localisation. Wi-Fi, radio-frequency identification (RFID), Bluetooth Low Energy (BLE) and Ultra-Wideband (UWB) have been adopted to build ad-hoc infrastructures located within public buildings and urban areas.

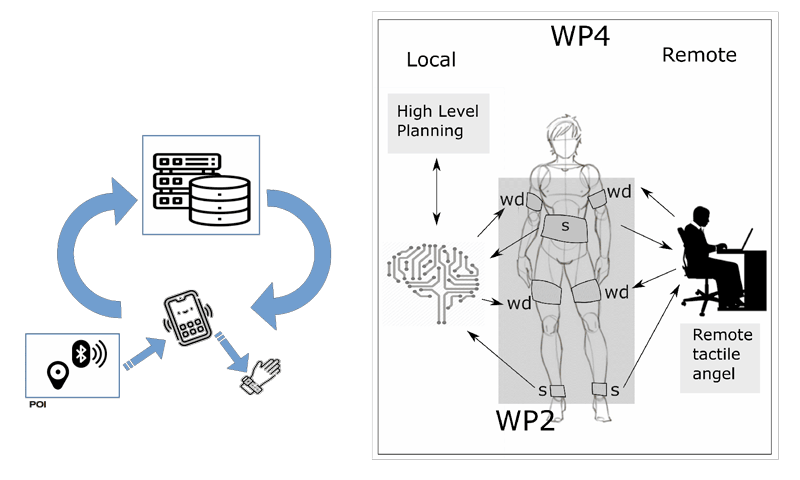

Figure 1: The typical flow of information between the components of a mobile navigation system that exploits haptic feedback, together with an excerpt from the TIGHT project documentation showing the role of haptic feedback in the project.

The most important requirement for an indoor positioning system is that it makes users aware of the surrounding space by guiding them through the environment and signalling relevant places or objects, known as Points of Interest (POIs). Another fundamental feature is disclosing information related to POIs (e.g. the descriptions associated with works of art in a mobile museum guide, or the opening hours of shops in a shopping mall). A typical architecture for an indoor mobile navigation app must hence include at least two layers: one to discover the POIs signalled by the hardware infrastructure, the other to convey information to the user in a suitable way. This is where wearable haptics come into play: tactile stimuli are an effective means to signal the presence of POIs to visually impaired users, and to guide them along a path in the presence of physical obstacles. An example of such an architecture is shown in Figure 1. The architecture can be generalised with respect to the hardware adopted for the positioning strategy. In our example we assume the use of Bluetooth Low Energy transmitters (also known as BLE beacons), which are extremely popular thanks to their ease of installation, low power consumption and fair localisation accuracy; infrastructures based on BLE beacons are in fact often present in public urban areas and public buildings in most of our cities. A beacon transmits its unique identifier (UID), so that it can be recognised by any nearby device equipped with a Bluetooth sensor. The UID can be used to retrieve information related to the corresponding POI from a remote server; this information is then processed and presented to the user.
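The two layers described above can be sketched in a few lines of Python. This is only an illustrative outline, not the TIGHT implementation: the class and function names, the beacon UIDs and the in-memory dictionary standing in for the remote server are all assumptions made for the example. The discovery layer reports each beacon UID the first time it is seen, the lookup layer resolves it to POI details, and the result is handed to whatever presentation channel is plugged in (here, simply a list).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set


@dataclass(frozen=True)
class PointOfInterest:
    uid: str
    name: str
    description: str


# Hypothetical stand-in for the remote server: maps beacon UIDs to POI records.
FAKE_SERVER: Dict[str, PointOfInterest] = {
    "beacon-001": PointOfInterest("beacon-001", "ATM", "Cash machine"),
    "beacon-002": PointOfInterest("beacon-002", "Check-in desk", "Open 05:00-22:00"),
}


def fetch_poi(uid: str) -> Optional[PointOfInterest]:
    """Lookup layer: resolve a beacon UID to POI details (None if unknown)."""
    return FAKE_SERVER.get(uid)


class PoiDiscovery:
    """Discovery layer: handles each beacon UID only the first time it is seen."""

    def __init__(
        self,
        lookup: Callable[[str], Optional[PointOfInterest]],
        present: Callable[[PointOfInterest], None],
    ) -> None:
        self._lookup = lookup
        self._present = present
        self._seen: Set[str] = set()

    def on_beacon(self, uid: str) -> None:
        if uid in self._seen:
            return  # already announced (or already found to be unknown)
        self._seen.add(uid)
        poi = self._lookup(uid)
        if poi is not None:
            self._present(poi)


def demo() -> List[str]:
    """Feed a simulated stream of beacon sightings through both layers."""
    announced: List[PointOfInterest] = []
    discovery = PoiDiscovery(fetch_poi, announced.append)
    for uid in ["beacon-001", "beacon-001", "beacon-999", "beacon-002"]:
        discovery.on_beacon(uid)
    return [poi.name for poi in announced]
```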
The figure shows how each POI signals its presence, via the associated BLE beacon, to the Bluetooth sensor built into the smartphone; as soon as the mobile app detects the POI, it issues a request to a remote server asking for details about the place or object that the POI represents. Haptic stimuli to guide the user may be issued via the smartphone itself and/or a wearable component such as a bracelet. Haptic stimuli may be assigned a semantic value: different tactile hints may be associated with specific features of a POI, such as its position and its distance from the user’s current position. A further personalisation layer may be present to tailor the retrieved information to the user’s needs; such a layer is advisable, since it provides a better user experience.
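To make the idea of semantically meaningful tactile hints more concrete, the following Python sketch maps a POI's distance and relative direction to a vibration pattern. Everything in it is an assumption made for illustration: the `HapticCue` structure, the distance and bearing thresholds, and the `intensity_scale` preference standing in for a personalisation layer are not taken from the project. The distance estimate uses the common log-distance path-loss model for received signal strength, which is known to be noisy indoors; the bearing is assumed to come from some other source, such as a compass combined with a map.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HapticCue:
    pulses: int    # number of vibration pulses
    pulse_ms: int  # duration of each pulse, in milliseconds
    side: str      # "left", "right" or "both" (e.g. which actuator fires)


def estimate_distance_m(
    rssi_dbm: float,
    tx_power_dbm: float = -59.0,      # assumed RSSI at 1 m from the beacon
    path_loss_exponent: float = 2.0,  # assumed free-space-like environment
) -> float:
    """Rough distance from signal strength (log-distance path-loss model)."""
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * path_loss_exponent))


def cue_for(
    distance_m: float,
    bearing_deg: float,
    intensity_scale: float = 1.0,  # hypothetical per-user preference
) -> HapticCue:
    """Map distance and relative bearing to a tactile cue.

    bearing_deg is the POI direction relative to the user's heading:
    negative values mean left, positive values mean right.
    """
    # Closer POIs produce more pulses (thresholds are arbitrary).
    if distance_m < 2.0:
        pulses = 3
    elif distance_m < 10.0:
        pulses = 2
    else:
        pulses = 1

    # A +/-15 degree window counts as "straight ahead".
    if bearing_deg < -15:
        side = "left"
    elif bearing_deg > 15:
        side = "right"
    else:
        side = "both"

    return HapticCue(pulses, round(100 * intensity_scale), side)
```

In a real app the returned cue would be translated into calls to the vibration interface of the smartphone or of the wearable device; a user who prefers stronger feedback could, for instance, be given an `intensity_scale` above 1.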

A solution based on user localisation and haptic notifications triggered by transmitters placed in the surrounding environment can be applied in many different use cases, not only those involving users with special needs. The haptic channel is in fact well suited to conveying content in a non-intrusive way, without overloading the other senses, which remain free for other tasks.

Link:

[L1] http://si.isti.cnr.it/index.php/hid-project-category-list/214-project-tight

References:

[1] F. Barontini, et al.: “Integrating Wearable Haptics and Obstacle Avoidance for the Visually Impaired in Indoor Navigation: A User-Centered Approach”. IEEE Transactions on Haptics 14(1), 109-122, 2021. https://doi.org/10.1109/TOH.2020.2996748

[2] D. Plikynas, et al.: “Indoor Navigation Systems for Visually Impaired Persons: Mapping the Features of Existing Technologies to User Needs”. Sensors 20(3), 636, 2020. https://doi.org/10.3390/s20030636

Please contact:

Barbara Leporini, ISTI-CNR, Italy

Maria Teresa Paratore, ISTI-CNR, Italy