by Gaia Pavoni, Massimiliano Corsini and Paolo Cignoni (ISTI-CNR)

In recent decades, benthic populations have been subjected to recurrent episodes of mass mortality. These events have been blamed in part on declining water quality and elevated water temperatures (see Figure 1) correlated with global climate change. Ecosystems are enhanced by the presence of species with three-dimensional growth, such as corals, and studying the growth, resilience and recovery capability of these species provides valuable information on the conservation status of entire habitats. Here we discuss a state-of-the-art solution to speed up the monitoring of benthic populations through the automatic or assisted analysis of underwater visual data.

![Figure 1: Spring-2019 coral bleaching event in Moorea: dead Pocillopora colonies have been covered by algae. Source: Berkeley Institute for Data Science [L3]](/images/stories/EN121/pavoni1.jpg)

Figure 1: Spring-2019 coral bleaching event in Moorea: dead Pocillopora colonies have been covered by algae. Source: Berkeley Institute for Data Science [L3].

Ecological monitoring provides essential information to analyse and understand the current condition and persisting trends of marine habitats, to quantify the impacts of both localised and large-scale events, and to assess the resilience of animal and plant species.

The underwater world is a hostile working environment for humans, and researchers' activities are limited in time and space. Large-scale exploration requires underwater vehicles, and the use of autonomous, data-driven robotics for acquiring image data is making large-scale underwater imaging increasingly popular. Yet these video and image sequences, although a trustworthy source of knowledge, are doomed to remain largely unexploited: a recent study [1] reported that just 1-2% of the millions of underwater images acquired each year on coral reefs by the National Oceanic and Atmospheric Administration (NOAA) are later analysed by experts. Automated solutions could help overcome this bottleneck.

Underwater photogrammetry is a useful technology for obtaining reliable measurements and monitoring benthic populations at different spatial scales. Detecting temporal variations in both biotic and abiotic space holders is a challenging task that demands a high degree of accuracy and fine-scale resolution, and such a detailed spatio-temporal optical analysis involves acquiring a massive stream of data. Evaluating changes in the benthos at a scale that reflects the growth and dissolution rates of its constituents requires pixel-wise classification. This task, called semantic segmentation, is highly labour-intensive when conducted manually: current manual workflows produce highly accurate and precise segmentations for fine-scale colony mapping, but they take about one hour per square metre.
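Once every pixel of a map carries a class label, simple demographic statistics such as per-class cover follow directly. The sketch below is illustrative only, not TagLab code: it assumes the label map is a NumPy integer array aligned with the ortho-mosaic, that class 0 is background, and that the ground sampling distance (`gsd_m`, a hypothetical parameter name) is known and uniform across the map.

```python
import numpy as np


def class_coverage_m2(label_map: np.ndarray, gsd_m: float) -> dict[int, float]:
    """Return the area (square metres) covered by each class in a per-pixel label map.

    label_map: 2-D integer array holding one class id per pixel (0 assumed = background).
    gsd_m: ground sampling distance in metres per pixel side (assumed uniform).
    """
    pixel_area = gsd_m * gsd_m  # area of a single pixel on the seabed
    ids, counts = np.unique(label_map, return_counts=True)
    return {int(i): float(c) * pixel_area for i, c in zip(ids, counts)}
```

For example, with a 1 mm ground sampling distance (`gsd_m=0.001`), a 1000 x 1000 pixel tile covers one square metre, which at the manual rate quoted above corresponds to roughly one hour of expert annotation.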

The automatic extraction of information can contribute enormously to environmental monitoring efforts and, more generally, to our understanding of climate change. Semantic segmentation can be performed automatically using Convolutional Neural Networks (CNNs). Nevertheless, the automatic semantic segmentation of benthic communities remains challenging owing to the complexity and high intra-specific morphological variability of benthic organisms, as well as the numerous artefacts introduced by the underwater image formation process.

In our work, we propose to classify and outline species directly on seabed ortho-mosaics. From a machine learning perspective, ortho-mosaics display a reduced variance of distinctive class features, because every colony is seen from a consistent top-down viewpoint at a uniform scale, and this simplifies automatic classification.
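An ortho-mosaic of a reef plot is usually far larger than the input a CNN can process at once, so a common practice is to run the network on overlapping tiles and stitch the results. The following is a minimal sketch of computing such tile windows; the function name and the default `tile` and `overlap` values are hypothetical, and the article does not describe TagLab's actual tiling strategy.

```python
def tile_windows(width: int, height: int, tile: int = 1024, overlap: int = 256):
    """Yield (x, y, w, h) windows that together cover a width x height image.

    Consecutive windows overlap by `overlap` pixels; the last window in each
    row/column is shifted back so that it ends exactly on the image border.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    step = tile - overlap

    def starts(size: int) -> list[int]:
        if size <= tile:
            return [0]  # a single (possibly smaller) window is enough
        positions = list(range(0, size - tile, step))
        positions.append(size - tile)  # final window flush with the border
        return positions

    for y in starts(height):
        for x in starts(width):
            yield x, y, min(tile, width - x), min(tile, height - y)
```

The overlap lets predictions near tile borders, where the network sees little context, be discarded or blended in favour of the neighbouring tile.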

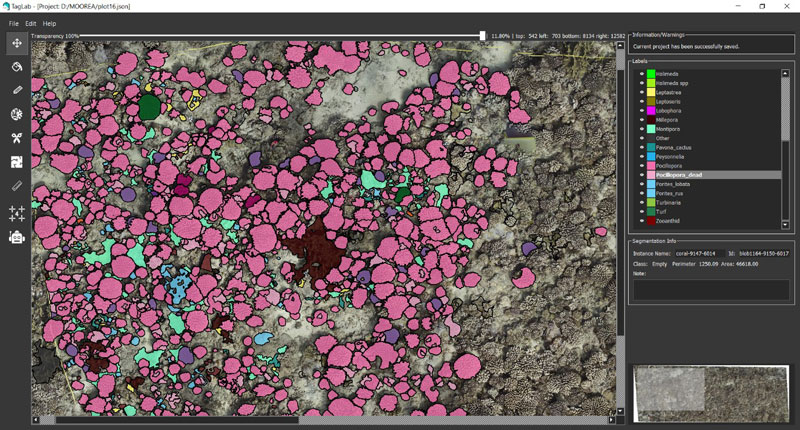

Figure 2: TagLab, an interactive semi-automatic tool for semantic segmentation and annotation of coral reef ortho-mosaics.

While fully automated semantic segmentation can significantly reduce processing time, current state-of-the-art solutions still lack the accuracy of human experts. Moreover, automating such specialised processes requires dedicated tools to prepare the data and to provide control over the results. We therefore propose a human-in-the-loop approach in which a skilled operator and supervised-learning neural networks cooperate through a user-friendly interface to achieve the required degree of accuracy. This can be seen as an interactive segmentation cycle for the automatic analysis of benthic communities: intelligent tools assist users in labelling large-scale training datasets; CNNs are trained on these data to classify coral species, and predictions are then inferred in new areas. When evaluating the predictions, the human operator intervenes again to correct both ML-based and human annotation errors. This maximises accuracy when extracting demographic statistics and when creating new datasets to train better-performing networks. To this end, we have developed a custom software tool: TagLab (see Figure 2).
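To judge how far CNN predictions are from expert-corrected labels, a standard measure is the per-class Intersection over Union (IoU). The sketch below is a generic implementation for two aligned label maps, assuming class ids lie in `[0, num_classes)`; it is not the evaluation protocol used by TagLab or by the cited studies.

```python
import numpy as np


def per_class_iou(pred: np.ndarray, truth: np.ndarray, num_classes: int) -> np.ndarray:
    """Intersection over Union of each class between a predicted and a reference label map.

    Both maps must have the same shape and contain ids in [0, num_classes).
    Classes absent from both maps get NaN.
    """
    # Confusion matrix: rows = reference (expert) class, columns = predicted class.
    idx = truth.astype(np.int64).ravel() * num_classes + pred.astype(np.int64).ravel()
    cm = np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(cm).astype(float)                 # pixels labelled c in both maps
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp   # predicted c + reference c - overlap
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(union > 0, tp / union, np.nan)
```

`np.nanmean(per_class_iou(...))` then gives the mean IoU over the classes that actually occur, which is a convenient single number to track across successive training rounds of the cycle described above.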

TagLab [L1] is an AI-powered, configurable annotation tool designed to speed up the human labelling of large maps. TagLab dramatically reduces dataset preparation time and brings the accuracy of CNN segmentation up to the level of a domain expert. Following the human-computer interaction paradigm, the software integrates a hybrid approach based on multiple degrees of automation. Assisted labelling is supported by CNNs specially trained for agnostic (object partition only) or semantic (also species-aware) segmentation of corals. An intuitive graphical user interface (GUI) speeds up the human editing of uncertain predictions, increasing overall accuracy. More precisely, TagLab integrates:

- Fully automatic per-pixel classifiers based on the fine-tuning of the semantic segmentation network DeepLab V3+ [3].

- A semi-automatic interactive per-pixel classifier that performs agnostic segmentation following the extreme clicking approach of Deep Extreme Cut [2].

- A refinement algorithm to quickly adjust the labelling of colony contours.

- Manual annotation tools to edit the per-pixel predictions. These make it possible to reach a level of accuracy not achievable through standard machine learning methods alone.
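In the extreme clicking approach of Deep Extreme Cut [2], the user clicks the leftmost, rightmost, topmost and bottommost points of an object; the image is cropped around the box these points define, and the clicks are passed to the network as an extra input channel containing a Gaussian centred on each point. The sketch below illustrates two of these ingredients under our own assumptions: deriving extreme points from an existing mask (a way to simulate clicks when generating training data) and encoding clicks as a heatmap. The function names, the default `sigma` and the pixel-wise maximum used to combine the Gaussians are assumptions of this sketch, not code from DEXTR or TagLab.

```python
import numpy as np


def extreme_points(mask: np.ndarray) -> np.ndarray:
    """Return (row, col) of the leftmost, rightmost, topmost and bottommost
    foreground pixels of a binary mask (ties resolved by first occurrence)."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask has no foreground pixels")
    picks = [np.argmin(cols), np.argmax(cols), np.argmin(rows), np.argmax(rows)]
    return np.array([[rows[i], cols[i]] for i in picks])


def click_heatmap(shape: tuple[int, int], points: np.ndarray, sigma: float = 10.0) -> np.ndarray:
    """Encode clicked points as a single channel with a 2-D Gaussian (peak 1.0)
    centred on each point, combined by pixel-wise maximum. This channel would be
    stacked with the RGB crop to form a four-channel network input."""
    rr, cc = np.mgrid[0:shape[0], 0:shape[1]]
    heat = np.zeros(shape, dtype=float)
    for r, c in points:
        g = np.exp(-((rr - r) ** 2 + (cc - c) ** 2) / (2.0 * sigma ** 2))
        heat = np.maximum(heat, g)
    return heat
```

Because the four clicks also bound the object, they fix the crop without any extra input from the operator, which is what makes this interaction fast on large maps.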

At the end of the process, TagLab outputs annotated maps, statistics and new training datasets. In addition, multiple projects can be managed for the multi-temporal comparison of labels. In summary, TagLab integrates dataset preparation, data analysis and validation of predictions, steps that are usually performed by separate software applications.
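Multi-temporal comparison requires pairing each colony in one survey with its counterpart in the next. The following minimal sketch assumes that the two maps are co-registered and that each colony carries a unique integer id (0 = background); the greedy best-IoU rule and the `min_iou` threshold are illustrative assumptions, not TagLab's matching procedure. It does not model fission or fusion: two colonies from the first survey may, for instance, both be paired with the same colony in the second.

```python
import numpy as np


def match_colonies(labels_t0: np.ndarray, labels_t1: np.ndarray, min_iou: float = 0.3):
    """Pair colonies across two co-registered instance maps (0 = background).

    Each colony at t0 is paired with the t1 colony of highest IoU, provided
    that IoU reaches min_iou. Returns {id_t0: (id_t1, area_t0, area_t1)} with
    areas in pixels; unmatched t0 colonies map to (None, area_t0, 0).
    """
    ids0 = [int(i) for i in np.unique(labels_t0) if i != 0]
    ids1, counts1 = np.unique(labels_t1, return_counts=True)
    area1 = {int(i): int(n) for i, n in zip(ids1, counts1) if i != 0}
    result = {}
    for i in ids0:
        m0 = labels_t0 == i
        a0 = int(m0.sum())
        # Which t1 ids fall inside this t0 colony, and how many pixels each.
        overlap_ids, overlap_px = np.unique(labels_t1[m0], return_counts=True)
        best, best_iou = None, 0.0
        for j, inter in zip(overlap_ids, overlap_px):
            if j == 0:
                continue
            iou = float(inter) / (a0 + area1[int(j)] - int(inter))
            if iou > best_iou:
                best, best_iou = int(j), iou
        if best is not None and best_iou >= min_iou:
            result[i] = (best, a0, area1[best])
        else:
            result[i] = (None, a0, 0)
    return result
```

The difference between the two areas, multiplied by the pixel area of the ortho-mosaic, gives the growth or shrinkage of each colony between surveys.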

TagLab has been successfully adopted by major long-term monitoring programmes: the 100 Island Challenge [L2], headed by the Scripps Institution of Oceanography (UC San Diego), and the Moorea Island Digital Ecosystem Avatar, headed by ETH Zurich and the Marine Science Institute (UC Santa Barbara) [L3].

Links:

[L1] https://github.com/cnr-isti-vclab/TagLab

[L2] http://100islandchallenge.org/

[L3] https://bids.berkeley.edu/research/moorea-island-digital-ecosystem-avatar-moorea-idea

References:

[1] O. Beijbom, et al.: “Improving Automated Annotation of Benthic Survey Images Using Wide-band Fluorescence”. Scientific Reports, 201.

[2] K.-K. Maninis, et al.: “Deep Extreme Cut: From Extreme Points to Object Segmentation”, Computer Vision and Pattern Recognition (CVPR), 2018.

[3] L.-C. Chen, et al.: “Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation”, European Conference on Computer Vision (ECCV), 2018.

Please contact:

Gaia Pavoni

ISTI-CNR, Italy