by Jacco van Ossenbruggen (CWI)

Research in the humanities typically involves studying specific and potentially subjective interpretations of (historical) sources, whilst the computational tools and techniques used to address such questions aim to provide generic and objective methods for processing large volumes of data. We claim that the success of a digital humanities project depends on the extent to which it succeeds in matching the specific with the generic, and the subjective with the objective. Trust in the outcome of a digital humanities study should be informed by a proper understanding of this match, which requires a non-trivial fitness-for-use assessment.

Modern computational tools often outperform their predecessors. These performance improvements, however, come at a price: increased complexity and a diminished understanding of what actually happens inside the black box that many tools have become.

Our argument is that scholars in the humanities need to understand the tools they use well enough to assess the limitations of each tool, and how these limitations might affect the outcomes of the study in which the tool is deployed. This level of understanding is needed for two reasons: to assess how well the generic task for which a tool was designed fits the specific problem being studied, and to assess whether the tool's limitations, in the context of this specific problem, may have unanticipated side effects that introduce unintended bias or errors into the analysis and so threaten the assumed objectivity of each computational step.

Our approach to this problem is to work closely with research partners in the humanities and jointly build the next generation of e-infrastructures for the digital humanities, allowing scholars to make these assessments in their daily research.

This requires more than technical work. For example, to raise awareness of this need, we recently teamed up with partners from the Utrecht Digital Humanities Lab and the Huygens ING to organise a second workshop, in July 2017, on the theme of tool criticism. A recurring issue during these workshops was the need for humanities scholars to be better trained in computational thinking, while computer scientists (including big data analysts and e-science engineers) need to better understand what information they must provide to humanities scholars to make such fitness-for-use assessments possible.

In computer science, the goal is often to come up with generic solutions by abstracting away from overly specific problem details. Assessing the behaviour of an algorithm in a specific context is not seen as the responsibility of the computer scientist, while black box algorithms are not transparent enough to transfer this responsibility to the humanities scholar. Making black box algorithms more transparent is thus a key challenge, and a sufficiently generic problem to which computer scientists like us can make important contributions.

In this context, we collaborate with the Dutch National Library (KB) in a number of projects to measure algorithmic bias and improve algorithmic transparency. For example, in the COMMIT/ project, CWI researcher Myriam Traub is investigating how black box optical character recognition (OCR) and search engine technology influence the accessibility of the library's extensive historical newspaper archive [1]. The central question is: can we trust that the (small) part of the archive that users find and view through the online interface is a representative view of the archive's entire content? And if not, is this bias caused by the interests of the users, or is it implicitly induced by the technology? Traub et al. studied six months of the library's web server logs, a period in which around a million unique queries were issued. They concluded that only a small fraction (less than 4%) of the archive's content was actually viewed, and that this was far from a representative sample. The bias, however, was largely attributable to the interests of the users, with a much smaller bias caused by the search engine's “preference” for medium-length articles, which leads to an underrepresentation of very short and very long articles in the search results. Follow-up research is currently focusing on the impact of OCR on retrievability bias.
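To make the kind of measurement discussed above more concrete, the following is a minimal Python sketch, not the method of [1]. It assumes the server log has already been reduced to a list of viewed document IDs, and it computes two numbers: the share of the archive viewed at least once, and a Gini coefficient over per-document view counts (0 means attention is spread evenly; values near 1 mean a few documents receive almost all views). Gini coefficients are a common way to summarise skew in retrievability studies, but the function names and toy data here are hypothetical.

```python
from collections import Counter
from typing import Iterable, List


def view_counts(viewed_doc_ids: Iterable[str], archive_ids: List[str]) -> List[int]:
    """Count views per archive document, including documents never viewed (count 0)."""
    counts = Counter(viewed_doc_ids)
    return [counts.get(doc_id, 0) for doc_id in archive_ids]


def coverage(counts: List[int]) -> float:
    """Fraction of the archive that was viewed at least once."""
    return sum(1 for c in counts if c > 0) / len(counts)


def gini(counts: List[int]) -> float:
    """Gini coefficient of the view counts (0 = perfectly even attention)."""
    xs = sorted(counts)
    n = len(xs)
    total = sum(xs)
    if total == 0:
        return 0.0
    # Formula on ascending-sorted values x_i with 1-based ranks i:
    # G = 2 * sum(i * x_i) / (n * sum(x_i)) - (n + 1) / n
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


if __name__ == "__main__":
    archive = ["a", "b", "c", "d", "e"]   # hypothetical toy archive of five documents
    viewed = ["a", "a", "a", "b", "a"]    # hypothetical toy log of viewed document IDs
    counts = view_counts(viewed, archive)
    print(f"coverage: {coverage(counts):.0%}")  # prints coverage: 40%
    print(f"gini: {gini(counts):.2f}")          # prints gini: 0.72
```

Note that such numbers only describe how skewed the viewed sample is. Separating user interest from technology-induced bias, as in the study above, needs further analysis, for example comparing what the search engine returns with what users actually click.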

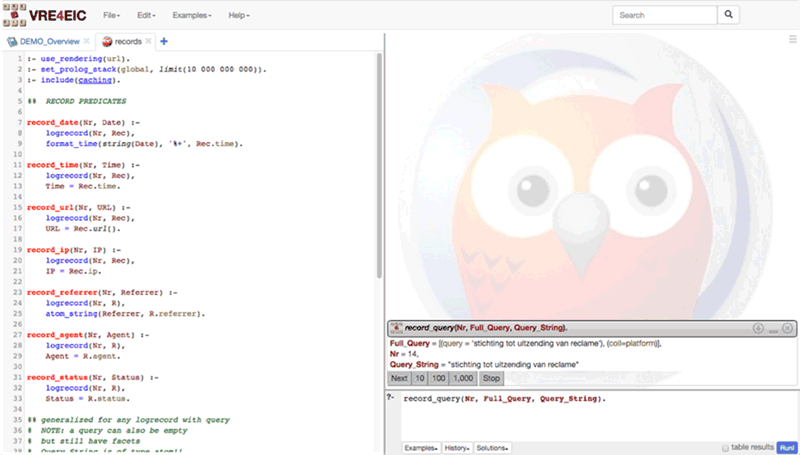

Screenshot of a SWISH executable notebook disclosing the full computational workflow in a search log analysis use case.

In the ERCIM-coordinated H2020 project VRE4EIC [L1] we collaborate with FORTH, CNR and other partners on interoperability across virtual research environments. At CWI, Tessel Bogaard, Jan Wielemaker and Laura Hollink are developing software infrastructure [2] to improve transparency across the full data science pipeline: data selection, cleaning, enrichment, analysis and visualisation. Reproducibility and other aspects of transparency may be partly lost if these steps are spread over multiple tools and scripts in a poorly documented research environment. Again, the KB search logs serve as a case study, and here another part of the challenge lies in creating a transparent workflow on top of a dataset that is inherently privacy-sensitive. For the coming years, the goal is to create trustworthy computational workflows, even when the data needed to reproduce a study is not available.
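As an illustration of what disclosing a full pipeline can mean, here is a minimal Python sketch. It is not the SWI-Prolog/ClioPatria infrastructure of [2] nor the SWISH notebook shown in the figure. Each step (selection, cleaning, enrichment, analysis; visualisation is omitted for brevity) is a named function, and the runner records, per step, the number of records in and out plus a SHA-256 fingerprint of the intermediate result. The assumption is that such a log could be shared even when the underlying privacy-sensitive data cannot, so that someone with access to the same data can check that a rerun yields identical intermediate results. All step names, field names and toy data are hypothetical.

```python
import hashlib
import json
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[list], list]]


def fingerprint(records: list) -> str:
    """SHA-256 over a canonical JSON serialisation of the records."""
    blob = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def run_pipeline(records: list, steps: List[Step]):
    """Apply each named step in order, logging what it did to the data."""
    log = []
    for name, fn in steps:
        out = fn(records)
        log.append({
            "step": name,
            "records_in": len(records),
            "records_out": len(out),
            "output_sha256": fingerprint(out),
        })
        records = out
    return records, log


# Hypothetical steps operating on a toy query log.
def select(rows):
    # Data selection: drop log entries with an empty query string.
    return [r for r in rows if r["query"].strip()]


def clean(rows):
    # Cleaning: normalise surrounding whitespace and case.
    return [{**r, "query": r["query"].strip().lower()} for r in rows]


def enrich(rows):
    # Enrichment: add the number of terms in each query.
    return [{**r, "n_terms": len(r["query"].split())} for r in rows]


def analyse(rows):
    # Analysis: a single summary record with the mean number of terms per query.
    mean = sum(r["n_terms"] for r in rows) / len(rows)
    return [{"queries": len(rows), "mean_terms": mean}]


STEPS: List[Step] = [("select", select), ("clean", clean),
                     ("enrich", enrich), ("analyse", analyse)]

if __name__ == "__main__":
    toy_log = [
        {"query": " Amsterdam Haven ", "hits": 12},
        {"query": "", "hits": 0},
        {"query": "Rotterdam", "hits": 3},
    ]
    result, log = run_pipeline(toy_log, STEPS)
    print(result)  # prints [{'queries': 2, 'mean_terms': 1.5}]
    for entry in log:
        print(entry["step"], entry["records_in"], "->", entry["records_out"])
```

Keeping every step in one script with an explicit log is the point of the design: nothing happens outside the recorded chain. In practice, fingerprints of small or low-entropy data could themselves leak information, so publishing them for a privacy-sensitive dataset would require more care than this sketch takes.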

Link:

[L1] https://www.vre4eic.eu

References:

[1] M. C. Traub et al.: “Querylog-based Assessment of Retrievability Bias in a Large Newspaper Corpus”, JCDL 2016, 7-16, 2016.

[2] J. Wielemaker et al.: “ClioPatria: A SWI-Prolog Infrastructure for the Semantic Web”, Semantic Web 7(5), 529-541, 2016.

Please contact:

Jacco van Ossenbruggen

CWI, The Netherlands

+31 20 592 4141