by Thierry Fraichard (Inria)

Self-driving vehicles are here and they already cause accidents. Now, should road safety be approached from a trial-and-error perspective or should it be addressed in a formal way? The latter option is at the heart of our research.

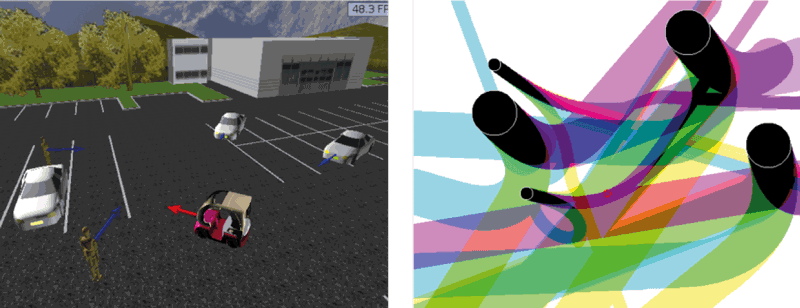

Self-driving technologies have improved to the point that major industrial players are now investing in, developing and testing self-driving vehicles in various countries. Fleets of self-driving vehicles are touted to be operational within five years. Besides economic reasons, a major incentive behind self-driving vehicles is safety. Road traffic deaths across the world reached 1.25 million in 2015 and, since the driver is responsible for most crashes, it seems natural to strive to design self-driving vehicles.

Obviously, an autonomous vehicle is safe if it avoids collisions. Now, collisions happen for different reasons: hardware or software failures, perceptual errors that result in an incorrect understanding of the situation (in May 2016, a self-driving vehicle crashed, killing its driver, because its camera failed to recognise a white truck against a bright sky), and reasoning errors, i.e., a wrong decision is made (in February 2016, a self-driving vehicle was for the first time responsible for a crash because of a wrong decision). Our research focuses on reasoning errors. At a fundamental level, a collision will happen as soon as a vehicle reaches an inevitable collision state (ICS), i.e., a state for which, no matter what the vehicle does, a collision will eventually occur [1].
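To make the ICS concept concrete, here is a minimal sketch for the simplest possible case: a vehicle moving along a straight line towards a fixed wall. The one-dimensional setting, the function name and its parameters are assumptions made for illustration only; they do not come from [1]. In this setting, full braking is the best the vehicle can do, so a state is an ICS exactly when the minimum stopping distance reaches or exceeds the remaining gap (touching the wall is counted as a collision here).

```python
def is_inevitable_collision_state(gap_m: float, speed_mps: float,
                                  max_decel_mps2: float) -> bool:
    """Return True if a 1D vehicle heading for a fixed wall cannot avoid it.

    Hypothetical illustration: the state is (gap to the wall, forward speed)
    and the only available control is braking, bounded by max_decel_mps2.
    """
    if max_decel_mps2 <= 0:
        raise ValueError("max_decel_mps2 must be positive")
    if speed_mps < 0:
        raise ValueError("speed_mps must be non-negative (forward motion only)")
    # Distance covered under constant maximum deceleration: v^2 / (2 a).
    stopping_distance = speed_mps ** 2 / (2.0 * max_decel_mps2)
    # Even the best manoeuvre (full braking) ends at or beyond the wall.
    return stopping_distance >= gap_m


if __name__ == "__main__":
    # 20 m/s with 8 m/s^2 of braking needs 25 m to stop.
    print(is_inevitable_collision_state(gap_m=50.0, speed_mps=20.0, max_decel_mps2=8.0))
    print(is_inevitable_collision_state(gap_m=20.0, speed_mps=20.0, max_decel_mps2=8.0))
```

With moving obstacles and steering, the same question has to be asked over every possible future trajectory, which is what makes ICS characterisation hard in general.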

The insights on collision avoidance resulting from our investigation of the ICS concept are expressed succinctly by the following quote: ‘One has a limited time only to make a motion decision, one has to globally reason about the future evolution of the environment and do so over an appropriate time horizon.’

As abstract and general as this quote may seem, it implicitly contains motion safety laws whose violation is likely to cause a collision [2]. ICS were initially investigated with the aim of designing motion strategies for which collision avoidance could be formally guaranteed. Assuming the availability of an accurate model of the future up to the appropriate time horizon, we were able to design motion strategies with guaranteed absolute motion safety, i.e., no collision ever takes place.

Figure 1: A self-driving vehicle among fixed and moving obstacles (left); 2D slice of the 5D state space of the self-driving vehicle, the black areas are the corresponding inevitable collision states that must be avoided (right).

In the real world, things are not so rosy since accurate information about the future evolution of the environment is not available. The choice then is between conservative and probabilistic models of the future. In conservative models, each obstacle is assigned its reachable set, i.e., the set of positions it can potentially occupy in the future. Conservative models solve the problem of the discrepancy between the predicted future and the actual future. In theory, they could allow for guaranteed motion safety. In practice, however, the reachable sets grow so quickly over time that every position becomes potentially occupied by an obstacle, and it is then impossible to find a safe solution.

In probabilistic models, the position of an obstacle at any given time is represented by an occupancy probability density function. Such models are well suited to represent the uncertainty that prevails in the real world. However, as sound as the probabilistic framework is, it cannot provide motion safety guarantees that can be established formally; minimising the collision risk is then the only thing that can be done. Today, most, if not all, self-driving vehicles rely upon probabilistic modelling and reasoning to drive themselves. The current paradigm is to test self-driving vehicles in vivo and, should a problem occur, to patch the self-driving system accordingly. In an effort to improve the situation and to provide provable motion safety guarantees, we would like to advocate an alternative approach that can be summarised by the following motto: “Better guarantee less than guarantee nothing.”
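The difficulty with conservative models can be illustrated with a small sketch. It assumes a one-dimensional road and obstacles about which only the current position and a maximum speed are known, so that each reachable set is an interval growing linearly with time. The function names, the road length and the obstacle values are hypothetical; the point is only to show how quickly the guaranteed-free portion of the road vanishes as the time horizon grows.

```python
def reachable_interval(position, max_speed, t):
    """Positions an obstacle may occupy at time t, knowing only its speed bound."""
    return (position - max_speed * t, position + max_speed * t)


def free_fraction(road_length, obstacles, t):
    """Fraction of [0, road_length] lying outside every reachable interval at time t.

    obstacles is a list of (position, max_speed) pairs (illustrative values).
    """
    # Clip each reachable interval to the road and sort by left endpoint.
    intervals = sorted(
        (max(0.0, lo), min(road_length, hi))
        for lo, hi in (reachable_interval(p, v, t) for p, v in obstacles)
    )
    covered = 0.0
    cursor = 0.0  # right end of the region already counted as covered
    for lo, hi in intervals:
        lo = max(lo, cursor)  # skip any overlap with earlier intervals
        if hi > lo:
            covered += hi - lo
            cursor = hi
    return 1.0 - covered / road_length


if __name__ == "__main__":
    obstacles = [(20.0, 10.0), (70.0, 5.0)]  # (position in m, max speed in m/s)
    for t in (0.0, 1.0, 3.0, 10.0):
        share = free_fraction(100.0, obstacles, t)
        print(f"t = {t:4.1f} s: {share:.0%} of the road is guaranteed free")
```

In this toy setting the road is entirely covered by reachable sets after a few seconds, which is the effect described above: no position can be declared safe over a long horizon.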

The idea is to settle for levels of motion safety that are weaker than absolute motion safety but that can be guaranteed. One such weaker level guarantees that, if a collision must take place, the self-driving vehicle will be at rest. This motion safety level has been dubbed passive motion safety and it has been used in several autonomous vehicles. Passive motion safety is interesting for two reasons: (i) it allows provision of at least one form of motion safety guarantee in challenging scenarios (limited field-of-view for the robot, complete lack of knowledge about the future behaviour of the moving obstacles [3]), and (ii) if every moving obstacle in the environment enforces it, then no collision will take place at all.
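The following sketch shows what a passive motion safety check could look like under strong simplifying assumptions; it is an illustration, not the method of [3]. The vehicle is a disc that brakes along a straight line at constant maximum deceleration, each obstacle is a disc known only by its position, radius and maximum speed, and the braking trajectory is tested at sampled time steps against the growing reachable discs of the obstacles. All names and parameters are hypothetical, and a time-sampled test is not a formal proof.

```python
import math


def is_passively_safe(pos, heading, speed, max_decel, obstacles,
                      vehicle_radius=1.0, dt=0.05):
    """Check that the vehicle can brake to rest before any obstacle can reach it.

    pos: (x, y) in metres; heading in radians; speed in m/s.
    obstacles: iterable of (x, y, radius, max_speed), behaviour unknown.
    """
    if max_decel <= 0 or dt <= 0:
        raise ValueError("max_decel and dt must be positive")
    stop_time = speed / max_decel
    steps = math.ceil(stop_time / dt)
    for k in range(steps + 1):
        t = min(k * dt, stop_time)
        # Distance travelled along the heading under constant braking.
        travelled = speed * t - 0.5 * max_decel * t * t
        x = pos[0] + travelled * math.cos(heading)
        y = pos[1] + travelled * math.sin(heading)
        for ox, oy, radius, max_speed in obstacles:
            # Conservative model: the obstacle may be anywhere in this disc.
            reach = radius + max_speed * t
            if math.hypot(x - ox, y - oy) <= reach + vehicle_radius:
                # Contact possible no later than the stopping instant
                # (that instant is included, which is conservative).
                return False
    return True


if __name__ == "__main__":
    state = dict(pos=(0.0, 0.0), heading=0.0, speed=10.0, max_decel=5.0)
    print(is_passively_safe(**state, obstacles=[(30.0, 0.0, 1.0, 0.0)]))
    print(is_passively_safe(**state, obstacles=[(30.0, 0.0, 1.0, 10.0)]))
```

Because the reachable discs only need to be considered up to the stopping time, the horizon stays short and the conservative model remains usable, which is why this weaker guarantee can be obtained where absolute motion safety cannot.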

We are currently exploring more sophisticated levels of motion safety. We are also studying the relationship between the perceptual capabilities of the self-driving vehicles and the levels of motion safety that can be achieved. The long-term goal of our research is to investigate if and how current self-driving technologies can be improved to the point that the human driver can safely be removed from the driving loop altogether, paving the way to truly self-driving vehicles whose motion safety can be formally characterised and guaranteed.

References:

[1] T. Fraichard, H. Asama: “Inevitable collision states. A step towards safer robots?”, Advanced Robotics, vol. 18, no. 10, 2004.

[2] T. Fraichard: “Will the driver seat ever be empty?” INRIA, Research Report RR-8493, Mar. 2014. [Online]. http://hal.inria.fr/hal-00965176

[3] S. Bouraine, T. Fraichard, H. Salhi: “Provably safe navigation for mobile robots with limited field-of-views in dynamic environments”, Autonomous Robots, vol. 32, no. 3, Apr. 2012.

Please contact:

Thierry Fraichard

Inria Grenoble Rhône-Alpes, France