by Robert Rößler, Thomas Kadiofsky, Wolfgang Pointner, Martin Humenberger and Christian Zinner (AIT)

Research at AIT on autonomous land vehicles focuses on transport systems and mobile machines that operate in unstructured and heavily cluttered environments such as off-road areas. In such environments, conventional technologies from advanced driver assistance systems (ADAS) and highly automated cars are of severely limited use. More general approaches to environmental perception are therefore needed to provide adequate information for planning and decision making. A particular challenge is posed by vehicles that actively change their environment, e.g., by cutting tall plants or manipulating piles of pellet material. Operating such machines autonomously requires novel approaches to motion planning.

In our latest research activity, the Austrian Armed Forces provided a tractor (type Steyr 6230 CVT) for automating agricultural tasks in special areas. As a first step, this mobile machine was converted into a mobile research platform in close cooperation with the manufacturer, CNH Austria. An electrical single-point interface provides access to the tractor's functions via actuators and the CAN bus, allowing additional computers running dedicated software to take over control. To cope with difficult and varying outdoor conditions, the vehicle is equipped with a multi-modal sensor system combining stereo vision, laser scanning, RTK-GPS and an inertial measurement unit (IMU). Two dedicated AIT stereo vision systems, one mounted behind the windshield at the front and one at the rear of the vehicle, provide dense 3D data of the tractor’s environment (Figure 1).

Figure 1: Top left: research platform Steyr 6230 CVT, Top right: additional computers running dedicated software (3x Intel i7 Ivy Bridge), Bottom: windshield-mounted AIT stereo camera system (light blue).

A key step towards further increasing efficiency in farming is the development of completely driverless machines, which would, for example, allow one operator to monitor and control several machines at once. AIT therefore investigates computer-vision-based technologies and methods that extend the capabilities of future autonomous vehicles and machines for agricultural applications. The research is also driven by a specific use case defined by the Austrian Armed Forces: the cultivation (e.g., mulching, mowing) of firebreaks on military training areas where explosive ordnance poses a hazard. The technology developed by AIT allows the operator to monitor and control the semi-autonomous machine from a safe distance while maintaining a high level of situational awareness.

The 3D data from the stereo camera systems [1] and the laser scanner are continuously fused into an ‘elevation map’, a 2.5D representation of the terrain. As the vehicle moves, the reconstruction of its surroundings becomes increasingly complete. An essential task is estimating the ego-motion and pose of the vehicle: the trajectories from stereo visual odometry, the orientation from the inertial measurement unit and the position from the RTK-GPS are fused with an extended Kalman filter, which models the kinematics of the vehicle and computes a precise estimate of its pose [2]. In addition, the geometry of the elevation map is analysed to obtain a traversability map, which marks drivable and non-drivable areas as well as obstacles around the vehicle. The map is organised in tiles, which allows large-scale maps to be stored, loaded and updated. Because the mapped areas are georeferenced, they can also be accessed via a geographic information system (GIS).
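The mapping steps described above can be illustrated with a small Python sketch. It is not AIT's implementation: the class and function names (`ElevationMap`, `traversability`), the cell size, the tile size, the rule of keeping the highest point per cell and the step-height threshold are all assumptions. The 3D points are also assumed to be already transformed into a georeferenced world frame using the estimated vehicle pose.

```python
import math
from collections import defaultdict


class ElevationMap:
    """Hypothetical 2.5D grid storing one height per (x, y) cell, grouped into square tiles."""

    def __init__(self, cell_size=0.2, tile_cells=256):
        self.cell_size = cell_size      # metres per cell (assumed)
        self.tile_cells = tile_cells    # cells per tile edge (assumed)
        self.tiles = defaultdict(dict)  # tile key -> {cell index: height}

    def cell_of(self, x, y):
        """World coordinates (metres) -> integer cell index."""
        return (math.floor(x / self.cell_size), math.floor(y / self.cell_size))

    def tile_of(self, cell):
        """Cell index -> tile key; floor division keeps negative cells in the right tile."""
        return (cell[0] // self.tile_cells, cell[1] // self.tile_cells)

    def insert_points(self, points):
        """Fuse world-frame 3D points, keeping the highest point per cell (conservative for obstacles)."""
        for x, y, z in points:
            cell = self.cell_of(x, y)
            tile = self.tiles[self.tile_of(cell)]
            if cell not in tile or z > tile[cell]:
                tile[cell] = z

    def height(self, cell):
        """Stored height of a cell, or None if it has not been observed yet."""
        tile = self.tiles.get(self.tile_of(cell))  # .get() does not create empty tiles
        return None if tile is None else tile.get(cell)


def traversability(emap, cell, max_step=0.3):
    """Label a cell 'unknown', 'obstacle' or 'drivable' from height steps to its 4 neighbours."""
    h = emap.height(cell)
    if h is None:
        return "unknown"
    cx, cy = cell
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        hn = emap.height((cx + dx, cy + dy))
        if hn is not None and abs(hn - h) > max_step:
            return "obstacle"  # step too high for the vehicle (threshold assumed)
    return "drivable"
```

Keying cells by tile means a tile can be written to or read from disk as a unit, which is one simple way to support the storing, loading and updating of large maps; a real system would also analyse slope and roughness rather than a single step threshold.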

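The pose fusion step can be illustrated with a compact extended Kalman filter over a planar pose (x, y, yaw). This sketch assumes a simple unicycle motion model driven by speed and yaw rate (for instance derived from visual odometry), direct position measurements from RTK-GPS and direct yaw measurements from the IMU. The class name `PoseEKF` and all noise values are hypothetical; a filter for a real tractor would more likely track full 3D orientation and use a vehicle-specific kinematic model.

```python
import math


def wrap(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def matmul(A, B):
    """Product of two small dense matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


class PoseEKF:
    """Hypothetical EKF over the planar pose s = (x, y, yaw); all noise values are assumptions."""

    def __init__(self, x=0.0, y=0.0, yaw=0.0):
        self.s = [x, y, yaw]
        self.P = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.1]]
        self.Q = [0.05, 0.05, 0.01]  # process noise added per prediction step

    def predict(self, v, omega, dt):
        """Unicycle motion model driven by speed v and yaw rate omega."""
        x, y, th = self.s
        self.s = [x + v * dt * math.cos(th), y + v * dt * math.sin(th), wrap(th + omega * dt)]
        # Jacobian of the motion model with respect to the state.
        F = [[1.0, 0.0, -v * dt * math.sin(th)],
             [0.0, 1.0, v * dt * math.cos(th)],
             [0.0, 0.0, 1.0]]
        Ft = [list(col) for col in zip(*F)]
        self.P = matmul(matmul(F, self.P), Ft)
        for i in range(3):
            self.P[i][i] += self.Q[i]

    def _update(self, i, z, r):
        """Scalar Kalman update for a direct measurement z of state component i (variance r)."""
        innov = z - self.s[i]
        if i == 2:
            innov = wrap(innov)  # compare angles on the circle
        S = self.P[i][i] + r
        K = [self.P[j][i] / S for j in range(3)]
        self.s = [self.s[j] + K[j] * innov for j in range(3)]
        self.s[2] = wrap(self.s[2])
        row = list(self.P[i])
        self.P = [[self.P[j][k] - K[j] * row[k] for k in range(3)] for j in range(3)]

    def update_gps(self, gx, gy, r=0.02 ** 2):
        """RTK-GPS position fix (variance r in m^2, assumed)."""
        self._update(0, gx, r)
        self._update(1, gy, r)

    def update_imu_yaw(self, yaw, r=0.01):
        """IMU heading measurement (variance r in rad^2, assumed)."""
        self._update(2, yaw, r)
```

Because the GPS and IMU noise are treated as independent, the measurements are applied as sequential scalar updates. This is equivalent to a joint update with a diagonal measurement covariance and avoids a matrix inversion.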
The real-time traversability map is used by the path planning module to calculate a collision-free trajectory along a predefined mission path. Dynamically appearing obstacles are avoided by calculating a local bypass route with a sampling-based motion planning algorithm [3]. If rerouting fails, the vehicle stops safely. A pilot module generates drive commands based on the tractor’s position relative to the planned path, and these commands are executed by the interface module that controls the tractor’s actuators.
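The planners analysed in [3] build on rapidly-exploring random trees (RRT). As an illustration only, the sketch below implements plain RRT, not the asymptotically optimal RRT* variant, to find a local bypass in the plane. The `is_free` callback stands in for a lookup in the traversability map and, like the function names and all parameter values, is an assumption. A return value of `None` corresponds to the case in which rerouting fails and the vehicle stops.

```python
import math
import random


def segment_free(a, b, is_free, res=0.1):
    """Check points along the segment a-b every `res` metres against the map."""
    n = max(1, int(math.dist(a, b) / res))
    return all(
        is_free(a[0] + (b[0] - a[0]) * k / n, a[1] + (b[1] - a[1]) * k / n)
        for k in range(n + 1)
    )


def rrt(start, goal, is_free, bounds, step=0.5, goal_tol=0.5, max_iter=3000, seed=0):
    """Plain RRT in the plane; returns a list of (x, y) waypoints or None."""
    rng = random.Random(seed)
    (xmin, xmax), (ymin, ymax) = bounds
    nodes = [start]
    parent = [None]  # parent[i] is the index of node i's parent in the tree
    for _ in range(max_iter):
        # Goal biasing: sample the goal itself 10 % of the time.
        if rng.random() < 0.1:
            sample = goal
        else:
            sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # Nearest tree node (linear search; a k-d tree would scale better).
        near_i = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_i]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer at most `step` metres from the nearest node towards the sample.
        t = min(step, d) / d
        new = (near[0] + t * (sample[0] - near[0]), near[1] + t * (sample[1] - near[1]))
        if not segment_free(near, new, is_free):
            continue
        nodes.append(new)
        parent.append(near_i)
        # Close enough to the goal: connect it and walk back up the tree.
        if math.dist(new, goal) <= goal_tol and segment_free(new, goal, is_free):
            path = [] if new == goal else [goal]
            i = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

RRT* extends this loop by choosing the lowest-cost parent among nearby nodes and rewiring the tree, so that the returned path converges towards the shortest one as the number of samples grows.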

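The article does not specify the pilot module's control law. Pure pursuit is one common way to turn a vehicle's position relative to a path into a steering command, and the sketch below uses it purely as an illustrative stand-in. The function name `pure_pursuit`, the look-ahead distance and the wheelbase value are assumptions, not parameters of the Steyr 6230 CVT.

```python
import math


def pure_pursuit(pose, path, lookahead=3.0, wheelbase=2.8):
    """Steering angle (rad, positive = left) that steers a bicycle-model vehicle
    at pose (x, y, yaw) towards a look-ahead point on `path` [(x, y), ...]."""
    x, y, yaw = pose
    # Start searching from the path point closest to the vehicle.
    closest = min(range(len(path)), key=lambda i: math.hypot(path[i][0] - x, path[i][1] - y))
    target = path[-1]  # fall back to the path end if no point is far enough
    for px, py in path[closest:]:
        if math.hypot(px - x, py - y) >= lookahead:
            target = (px, py)
            break
    # Express the target in the vehicle frame (x forward, y to the left).
    dx, dy = target[0] - x, target[1] - y
    lx = math.cos(yaw) * dx + math.sin(yaw) * dy
    ly = -math.sin(yaw) * dx + math.cos(yaw) * dy
    dist_sq = lx * lx + ly * ly
    if dist_sq == 0.0:
        return 0.0  # already at the target
    curvature = 2.0 * ly / dist_sq  # arc through the vehicle origin and the target
    return math.atan(wheelbase * curvature)
```

A longer look-ahead distance gives smoother but less precise tracking; the resulting angle would then be passed on as a steering command to the actuator interface.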
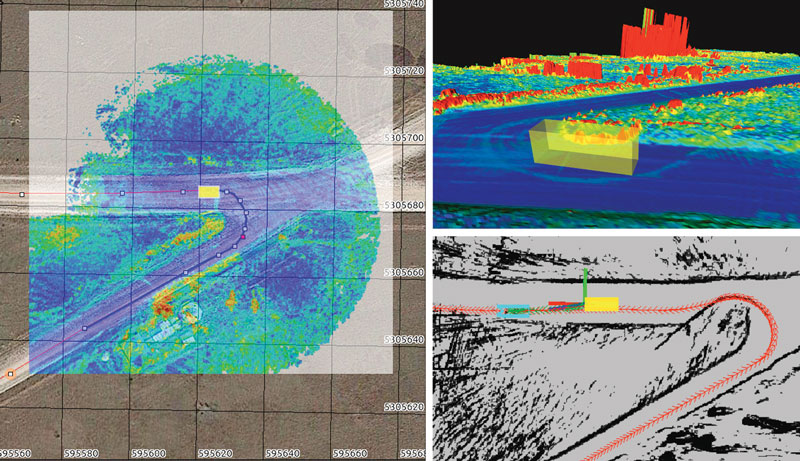

The information collected by the vehicle, such as camera streams, the vehicle’s trajectory and the computed map data, is transmitted to a base station, where the operator can access it through a graphical user interface. Switching between visualisation modes helps the operator evaluate complex situations. Overlaying live map data on satellite images and displaying a 3D reconstruction of the vehicle’s environment increase the operator’s situational awareness and support efficient and safe operation (Figure 2).

Figure 2: Left: satellite image (Google Maps) overlaid with real-time traversability map; planned path and waypoints (red), vehicle trajectory (blue), Top right: 3D reconstruction of vehicle surroundings coloured by traversability from blue (good) to red (bad/obstacle), Bottom right: real-time path planning; planned path (red arrows), next planned vehicle position (cyan).

Vehicle tasks are planned in the graphical user interface on the basis of satellite images and sensor data. The operator interacts with and controls the system using common, user-friendly input devices such as a mouse and a game controller. The vehicle supports several modes of operation, which represent different levels of autonomy:

- manual teleoperation

- a semi-autonomous mode called ‘Click&Drive’

- fully autonomous following of a planned path.

In ‘Click&Drive’ mode the next waypoint can be set directly or special manoeuvres such as Y-turns can be triggered by the operator. The vehicle executes these commands using its autonomous capabilities of path planning and collision avoidance.

With the developed system, AIT addresses a real safety problem, the cultivation of areas where explosive ordnance poses a hazard, for which no appropriate off-the-shelf solution is currently available, and thereby demonstrates the potential of autonomous systems that are expected to operate in the near future. At the same time, AIT is pressing ahead with research on human-machine interaction and on environmental perception and segmentation for machines that change their environment during operation.

Link:

http://www.ait.ac.at/themen/3d-vision/autonomous-land-vehicles/

References:

[1] M. Humenberger et al.: “A fast stereo matching algorithm suitable for embedded real-time systems”, Computer Vision and Image Understanding, 114 (2010), 11.

[2] S. Thrun et al.: “Probabilistic Robotics”, MIT Press, Cambridge, 2005, ISBN 9780262201629.

[3] S. Karaman, E. Frazzoli: “Sampling-based Algorithms for Optimal Motion Planning”, International Journal of Robotics Research, 30 (2011), 7.

Please contact:

Robert Rößler, Thomas Kadiofsky, Wolfgang Pointner, Martin Humenberger, Christian Zinner

AIT Austrian Institute of Technology GmbH, Austria

E-mail: {robert.roessler, thomas.kadiofsky, wolfgang.pointner, martin.humenberger, christian.zinner}@ait.ac.at