by Péter Gáspár, Tamás Szirányi, Levente Hajder, Alexandros Soumelidis, Zoltán Fazekas and Csaba Benedek (MTA SZTAKI)

A project was launched at the institute, relying on interdepartmental synergies, with the aim of joining the autonomous vehicles R&D arena. Within the project, demonstrations of the added capabilities, which make substantial use of multi-modal sensor data, are planned in controlled traffic environments. The demonstrations cover path-planning, speed control, curb detection, road and lane following, road structure detection, road sign and traffic light detection, and obstacle detection.

The development of intelligent – and particularly self-driving – cars has been a hot topic for at least two decades now. It ignited heated competition, initially among various university and R&D teams, later among vehicle engineering enterprises, and more recently among high-profile car manufacturers and IT giants. An autonomous car relies on many functions and comprises numerous subsystems that support its autonomous capabilities. These include route-planning, path-planning, speed control, curb detection, road and lane detection and following, road structure detection, auto-steering, road sign and traffic light detection and recognition, obstacle detection, adaptive cruise control, vehicle overtaking and auto-parking [1]. To achieve vehicular autonomy, it is important to know not only the mechanical state of the car and the geometrical and mechanical properties of the actual road, but also the geographical context and the kind of environment the car moves in.

To gain first-hand experience in this R&D arena and to implement some of the aforementioned functions, the Computer and Automation Research Institute, Budapest, Hungary launched a project in 2016 to add autonomous vehicle features, together with the associated automatic control, to a production electric car. Two of the institute’s research laboratories are involved in the project: one that deals with the theory and application of automatic control (among various other fields in the area of vehicle dynamics and control), and one that is engaged in machine perception. These closely related, overlapping research interests and experience offer a great opportunity for R&D synergy.

A Nissan Leaf electric car was fitted with a heavy-duty roof rack, which supports an array of sensors: six RGB cameras and two 3D scanning devices, namely a LIDAR with 16 beams and one with 64 beams. A further LIDAR with 16 beams is mounted on the front of the car. These devices view and scan the spatial environment around the host car and explore the traffic and road conditions in the vicinity. The test car is also equipped with a high-precision navigation device, which includes an inertial measurement kit, and a professional vehicle-diagnostic device that can access the data from the built-in vehicular sensors that are necessary for autonomous control. Given that the car is fully electric and equipped with a host of computing and measurement facilities with considerable power consumption, provisions for recharging the batteries had to be made: a fast charge-point was installed in the institute’s carport.

Figure 1: A host of image and range sensors are mounted onto the electric test car.

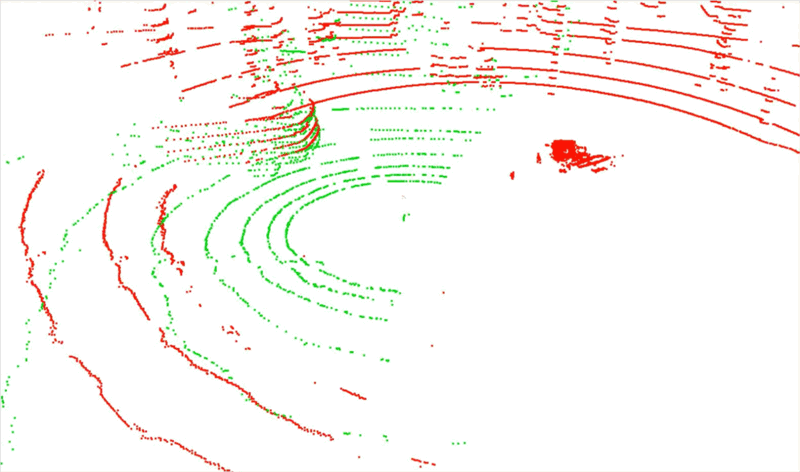

To obtain metric information from the image and the range sensors, they need to be calibrated to each other. However, the data coming from LIDARs and RGB cameras are of different modalities: the former build up spatial point clouds, while the latter form colour image sequences. Recently, a fast and robust calibration method, applicable to either or both of these modalities, was developed [2]. The method uses an easy-to-obtain fiducial (e.g., a cardboard box of uniform colour). This is an advantage over other methods, as it allows the sensors to be transferred between vehicles when required: the recalibration made necessary by the different geometrical configurations that can be conveniently set up on different vehicles can be carried out with little effort. The idea is that the fiducial can be easily detected in both 2D and 3D, so the calibration problem is transformed into one of 2D-3D registration. Two point clouds that have been registered using this approach are shown merged in Figure 2.

Figure 2: Two point clouds recorded simultaneously in street traffic with the two small LIDARs ‒ mounted on the car shown in Figure 1 ‒ are merged after registration. The green dots represent the point cloud taken with the front LIDAR (not visible in Figure 1), while the red dots are from the small (visible) LIDAR on the roof rack.
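To illustrate what a 2D-3D registration has to achieve, the following minimal sketch maps LIDAR points into a camera image with a rigid transform followed by a pinhole projection, and measures the mean reprojection error against the pixels where the same points were observed. It is not the method of [2]; it only shows the quantity that a calibration of this kind would typically aim to keep small. All names, the intrinsic parameters and the fiducial corner coordinates are hypothetical, and the LIDAR frame is assumed to share the camera's axis convention (z pointing forward).

```python
from math import hypot

def to_camera_frame(p_lidar, R, t):
    """Rigid transform p_cam = R * p_lidar + t (R: 3x3 nested list, t: 3-tuple)."""
    return tuple(sum(R[i][j] * p_lidar[j] for j in range(3)) + t[i]
                 for i in range(3))

def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point; None if it lies behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

def mean_reprojection_error(points_lidar, pixels, R, t, intrinsics):
    """Mean pixel distance between projected LIDAR points and observed pixels."""
    errors = []
    for p, (u_obs, v_obs) in zip(points_lidar, pixels):
        uv = project(to_camera_frame(p, R, t), *intrinsics)
        if uv is None:
            continue  # skip points that cannot be seen by the camera
        errors.append(hypot(uv[0] - u_obs, uv[1] - v_obs))
    return sum(errors) / len(errors) if errors else float("inf")

# Hypothetical values, for illustration only.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
INTRINSICS = (1000.0, 1000.0, 640.0, 360.0)  # fx, fy, cx, cy in pixels
corners = [(1.0, 0.5, 10.0), (-1.0, 0.5, 10.0), (1.0, -0.5, 10.0)]  # metres

# Simulate the image observations with the "true" extrinsics (identity here).
observed = [project(to_camera_frame(p, IDENTITY, (0.0, 0.0, 0.0)), *INTRINSICS)
            for p in corners]

good = mean_reprojection_error(corners, observed, IDENTITY,
                               (0.0, 0.0, 0.0), INTRINSICS)
bad = mean_reprojection_error(corners, observed, IDENTITY,
                              (0.1, 0.0, 0.0), INTRINSICS)
print(good, bad)
```

With the correct extrinsics the error is zero, while a 10 cm lateral offset at a range of 10 m shifts every projected point by about 10 pixels. A registration procedure would search for the rotation and translation that minimise this error over the detected fiducial points.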

Trajectory, video, point cloud and vehicular sensor data are collected and recorded in urban areas on public roads near Budapest. Presently, the detection of cars, pedestrians, road structures and buildings is on the agenda [3]. The collected data are used in the design, simulation and testing of the algorithms and modules that implement the autonomous operation of the car. The test runs of the autonomous features and control developed for the project car will initially take place in closed industrial areas and on test courses.

This kind of data can also be utilised in various mapping, surveying, measurement and monitoring applications in connection with roads (e.g., for assessing the size distribution of potholes, or for measuring the effective width of snow-, mud- or water-covered roads), traffic (e.g., measuring the length and the composition of vehicle queues) and the built environment (e.g., detecting changes in the road layout, roadwork sites, broken guard-rails and skewed traffic signs).

Link:

https://www.sztaki.hu/en/science/projects/lab-autonomous-vehicles

References:

[1] Z. Fazekas, P. Gáspár, Zs. Biró and R. Kovács (2014) Driver behaviour, truck motion and dangerous road locations – unfolding from emergency braking data. TRE, 65, 3-15.

[2] B. Gálai, B. Nagy and Cs. Benedek (2016) Cross-modal point cloud registration in the Hough space for mobile laser scanning data. IEEE ICPR, 3363-3368.

[3] D. Varga and T. Szirányi (2016) Detecting pedestrians in surveillance videos based on convolutional neural network and motion. EUSIPCO, 2161-2165.

Please contact:

Péter Gáspár, MTA SZTAKI, Hungary

+36 1 279 6171