by Dimitrios Serpanos (University of Patras and ISI), Howard Shrobe (CSAIL/MIT) and Muhammad Taimoor Khan (University of Klagenfurt)

Behaviour-based security enables industrial control systems to address a wider range of risks than classical IT security, providing an integrated approach to detecting security attacks, dependability failures and violation of safety properties.

Cyber-physical systems are increasingly employed for the control and management of processes, ranging from cars and traffic lights to medical devices; from industrial floors to nuclear power generation and distribution. Importantly, industrial control systems (ICS), a large class of cyber-physical systems composed of specialised industrial computers and networks, are employed to control and manage critical national infrastructure, such as power, transportation and health infrastructure. ICS are now vital to the welfare of nations and individuals, and consequently these systems are increasingly becoming targets of cyber-attacks as evidenced by a number of recent sophisticated incidents, such as Stuxnet and the hacking of cars and health devices.

Industrial control systems differ from traditional IT systems in several ways, from their purpose to their ownership and maintenance: they support processes instead of people, they interface with physical systems, they are typically owned by engineers, and their requirements for real-time continuous operation necessitate different approaches to upgrade and maintenance. These characteristics have led them to be known as OT (Operational Technology) rather than IT, and they are often targeted by novel attacks that differ from traditional IT attacks. For example, in addition to the typical computational or networking attacks, where malicious software is inserted into a system or transmitted to it through a network, a new attack that has so far affected only OT systems is ‘false data injection’ (FDI): instead of attacking the computers or the networks of sensor-based systems, an attacker attacks the sensors and inserts false data (measurements) in order to lead the system to a wrong decision, even though no malicious software is running and network packets are not manipulated in any way. FDI attacks can be quite powerful and can lead to catastrophic results, as simple experiments easily demonstrate (for water networks, power networks, etc.). A second class of novel attacks involves timing disruptions, in which the control system is prevented from issuing new commands within the time constant of the system under control; these attacks can be achieved through subtle disruption of the networks that carry sensor data to the controller, or by the introduction of parasitic computations on the host whose only goal is to slow down the controller.
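To make the FDI idea concrete, the following is a minimal sketch of a toy control loop, not a model of any real plant. The tank, the on/off controller, the function names and all numeric values are assumptions introduced purely for illustration. The controller code is never modified; only the value reported by the sensor is falsified.

```python
def pump_command(reported_level, setpoint=5.0):
    """Hypothetical on/off controller: pump while the reported level is below the setpoint."""
    return reported_level < setpoint


def simulate(steps, sensor_bias=0.0, capacity=10.0):
    """Run the toy control loop for a number of steps.

    sensor_bias models data falsified at the sensor: the controller only
    ever sees level + sensor_bias, never the true level.
    Returns (final_level, overflowed).
    """
    level = 4.0  # assumed initial true level
    for _ in range(steps):
        reported = level + sensor_bias
        if pump_command(reported):
            level += 1.0   # assumed inflow while the pump is on
        else:
            level -= 0.5   # assumed steady outflow while the pump is off
        level = max(level, 0.0)
        if level > capacity:
            return level, True   # physical limit exceeded
    return level, False


# Honest sensor: the level stays close to the setpoint.
_, overflow_honest = simulate(20)

# Falsified sensor: the tank always looks emptier than it is,
# so the unmodified controller keeps pumping until it overflows.
_, overflow_attacked = simulate(20, sensor_bias=-6.0)
```

In this sketch the attacked run overflows although the controller logic, the host and the network traffic format are all untouched, which is why defences that inspect only software and packets would have nothing to flag.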

Traditional computer and network security has addressed the problem of fortifying IT systems and networks, but it has been quite limited in addressing systems with real-time requirements and has not considered FDI or timing disruption attacks. The traditional approach to detecting malicious processes and/or traffic relies on two basic methods: static and dynamic. Static analyses focus on static characteristics of processes and packets, such as signatures, while dynamic ones focus on process and traffic behaviour. Traditionally, behaviour is defined as patterns of resource usage, such as memory and I/O in a computing system, network ports, source and destination addresses, connections, etc. This leads to a pattern-based definition of behaviour, for which two main directions exist: one can define bad behaviour patterns, then monitor the system and try to detect when one (or more) of them occurs; or one can define good behaviour patterns, then monitor the system and try to detect deviations from them.
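The two pattern-based directions can be sketched as follows. This is an illustrative toy, not a description of any particular intrusion detection product: the `Connection` record, the process names and the pattern sets are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """Hypothetical resource-usage event: which process talks to which port."""
    process: str
    dest_port: int


# Assumed pattern sets, for illustration only.
KNOWN_BAD = {Connection("dropper", 4444)}
KNOWN_GOOD = {Connection("hmi", 502), Connection("historian", 5432)}


def bad_pattern_alert(event):
    """Direction 1: alert when the event matches a known bad pattern."""
    return event in KNOWN_BAD


def deviation_alert(event):
    """Direction 2: alert when the event deviates from every known good pattern."""
    return event not in KNOWN_GOOD


unknown = Connection("updater", 8080)   # not in either set
legit = Connection("hmi", 502)          # expected traffic, whatever values it carries
```

Here `unknown` is missed by the bad-pattern check but flagged by the deviation check. The `legit` event raises no alert under either check, even if the measurements it carries have been falsified, because the resource-usage pattern itself is unchanged.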

We follow a different approach to defining behaviour and building secure industrial control systems. We define behaviour as the (executable) specification of an application. Based on this definition, we develop ICS that execute the (executable) specification in parallel with the application code. We monitor the execution of the application code and identify deviations between the specification execution, which produces predictions, and the application code execution, which produces observations. Importantly, this approach to behaviour definition enables systems to detect all types of behavioural deviations from the specification, independently of motive, i.e., both malicious and accidental, integrating security with fault tolerance in the same approach.
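The prediction-versus-observation idea can be illustrated with a minimal sketch. It is not ARMET's implementation: the valve-control functions, the `BehaviourDeviation` exception, the tolerance and the tampered variant are hypothetical, and the specification is evaluated sequentially here rather than in parallel.

```python
class BehaviourDeviation(Exception):
    """Raised when an observation disagrees with the specification's prediction."""


def spec_valve_opening(pressure):
    """Hypothetical executable specification: proportional opening clamped to [0, 1]."""
    return min(max((pressure - 2.0) / 8.0, 0.0), 1.0)


def app_valve_opening(pressure):
    """Hypothetical application code that is meant to implement the specification."""
    if pressure <= 2.0:
        return 0.0
    if pressure >= 10.0:
        return 1.0
    return (pressure - 2.0) / 8.0


def tampered_valve_opening(pressure):
    """Faulty or compromised variant: keeps the valve shut at high pressure."""
    return 0.0 if pressure > 9.0 else app_valve_opening(pressure)


def make_monitor(spec, impl, tolerance=1e-6):
    """Wrap impl so that every call is checked against spec."""
    def monitored(value):
        prediction = spec(value)    # what the specification says should happen
        observation = impl(value)   # what the application code actually did
        if abs(prediction - observation) > tolerance:
            raise BehaviourDeviation(
                f"input={value}: predicted {prediction}, observed {observation}"
            )
        return observation
    return monitored


safe_valve = make_monitor(spec_valve_opening, app_valve_opening)
suspect_valve = make_monitor(spec_valve_opening, tampered_valve_opening)
```

Calling `safe_valve(6.0)` returns the opening unchanged, while `suspect_valve(9.5)` raises `BehaviourDeviation`. The check does not depend on whether the deviation stems from an attack or from a bug, which mirrors the point that motive is irrelevant to detection.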

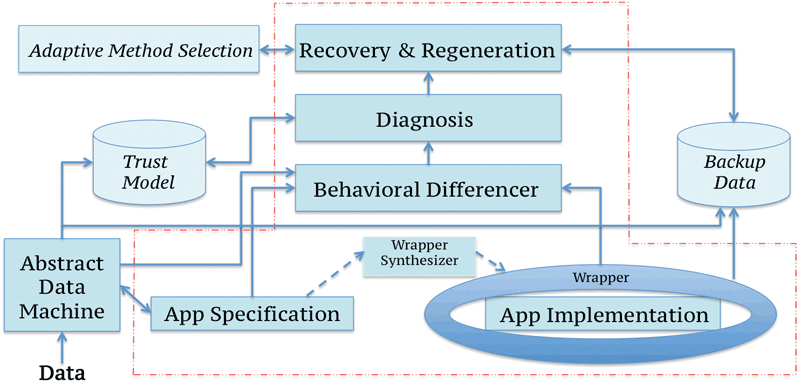

Figure 1: ARMET, a middleware system for ICS.

Exploiting this approach, we have been developing ARMET, a middleware system for ICS shown in Figure 1, working in three main security research directions:

- Safe code derivation from specifications, based on the FIAT approach [1], in order to develop safe application code (App Implementation in Figure 1) with specified security properties from the application specification (App Specification)

- Monitor development (Behavioural Differencer) that accurately detects (without false positives or negatives) deviations between application specification and execution [2], and

- Methods to identify vulnerabilities for false data injection attacks and encode them in the Abstract Data Machine, so that the monitor can protect against them. Our work has already provided promising results for power (smart grid) systems [3].
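As background on why FDI vulnerabilities in state estimation need dedicated analysis, the sketch below uses a deliberately simplified linear measurement model with a single scalar state. This is an assumption made for brevity: the work in [3] addresses the nonlinear AC case, and nothing here reflects how vulnerabilities are encoded in ARMET. The vector `h`, the measurements and the attack size `c` are made-up values. In a linear least-squares estimator, a falsification aligned with the measurement model shifts the estimate without changing the residual that bad-data detection typically checks.

```python
def estimate(h, z):
    """Least-squares estimate of a scalar state x from measurements z ~ h * x."""
    return sum(hi * zi for hi, zi in zip(h, z)) / sum(hi * hi for hi in h)


def residual_norm(h, z):
    """Euclidean norm of the measurement residual z - h * x_hat."""
    x_hat = estimate(h, z)
    return sum((zi - hi * x_hat) ** 2 for hi, zi in zip(h, z)) ** 0.5


# Assumed measurement model and slightly noisy readings of a state near 1.0.
h = [1.0, 2.0, -1.0]
z = [1.02, 1.97, -1.01]

# Naive falsification: corrupt one sensor only. The residual grows noticeably.
naive = [z[0] + 1.5, z[1], z[2]]

# Coordinated falsification: add h * c to every measurement.
# The estimate moves by c while the residual stays the same.
c = 0.5
stealthy = [zi + hi * c for hi, zi in zip(h, z)]

shift = estimate(h, stealthy) - estimate(h, z)
residual_change = residual_norm(h, stealthy) - residual_norm(h, z)
```

In this toy setting `shift` equals `c` and `residual_change` is zero up to floating-point rounding, whereas the naive falsification produces a much larger residual. Residual checks alone therefore cannot catch the coordinated case.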

This approach is practical today because it capitalises on significant recent advances in software verification and formal methods that enable analyses of large programs. It is especially well suited to cyber-physical systems and ICS, which implement specific processes or applications (‘plants’ in control system terminology). Advantages include the automated development of safe code and the design and implementation of robust monitors that can identify when security properties are violated.

References:

[1] B. Delaware, et al.: “Fiat: Deductive Synthesis of Abstract Data Types in a Proof Assistant”, in Proc. of POPL'15. Jan. 2015.

[2] M.T. Khan, D. Serpanos, H. Shrobe: “Sound and Complete Runtime Security Monitor for Application Software”, arXiv:1601.04263 [cs.CR].

[3] S. Gao et al.: “Automated Vulnerability Analysis of AC State Estimation under Constrained False Data Injection in Electric Power Systems”, in Proc. of IEEE CDC’15. Dec. 2015.

Please contact:

Dimitrios Serpanos,

University of Patras and ISI, Greece,

Tel: +30 261 091 0299