by Nisrine Jafri, Axel Legay and Jean-Louis Lanet (Inria)

Fault injection exploits hardware weaknesses to perturb the behaviour of embedded devices. Here, we present new model-based techniques and tools, developed at the High-Security Laboratory at Inria, to detect such attacks.

Embedded systems are computer systems with a dedicated function within larger mechanical or electrical systems. Unlike classical computer units, they are embedded as part of a complete device, often including hardware and mechanical parts. Embedded systems control many common devices, including smart phones, cars, smart cities, and robots. The number of embedded systems is growing continuously at a rate of more than 10% per annum, and over 40 billion devices are predicted worldwide by 2020 (five to ten embedded devices per person on earth). It is thus very important that the engineering process for embedded systems development includes techniques to assess a wide range of safety, availability, and security requirements.

‘Fault injection’ is an attack in which the hardware is used to create exploitable errors at the software level. Such errors include modifying a value read from memory or altering the program flow, and they can be induced with techniques such as laser pulses, voltage modification, or clock glitches [1]. The Row Hammer attack [2] is a classic example of fault injection. The idea behind it is to design a program that repeatedly accesses a certain row of memory cells in DRAM, stressing the row until its charge leaks into the adjacent one. This leakage can cause a bit flip, in which cells in the adjacent row of memory have their state inverted.

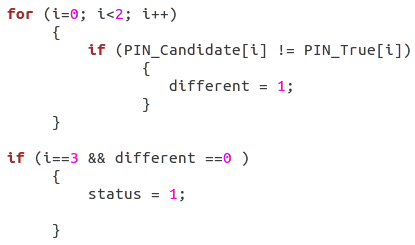

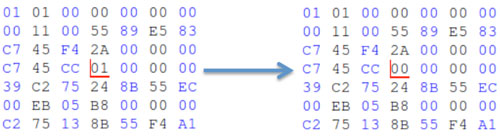

The power of fault injection can be illustrated with a simple PIN verification program. This C program, shown in Figure 1, compares a PIN candidate with the true PIN. If the two are equal, the variable status is set to 1; otherwise it remains at 0. Observe that if the two PINs are different, there is no way to obtain status=1 simply by exploiting the software layer. However, this can be achieved by mutating the x86 binary code. Figure 2, which shows the binary code for Figure 1, illustrates this modification: the mutation of a single bit that corresponds to the value of status. This mutation can be performed via bit reset, for example.

Figure 1: Part of C code where the attack is performed.

Figure 2: Machine code before and after fault injection.

One of our goals is to propose a formal model-based tool to detect faults of this type. The major difficulty is that, in contrast to classical security vulnerabilities such as buffer overflows, detecting fault injection requires modelling not only the software but also the hardware and the interactions between the two. Consequently, applying formal models raises two challenges: (i) developing techniques that produce mutations of binary code corresponding to hardware fault injections, and (ii) providing techniques to analyse such mutated code.

For challenge (i), our tool relies on existing fault models and mutation techniques. Obtaining such models is a major effort, which is beyond the scope of this article. For challenge (ii), we propose to use model checking [3]. This approach has already been applied to high-level code, but little has been done at the binary level. The main obstacles to model checking machine code are the modelling of a complex processor and the handling of exceptional control flow.

To bypass these issues, we use the intermediate representation (IR) of the LLVM compiler framework, a collection of modular and reusable compiler and toolchain technologies. It has recently become increasingly common to analyse programs at the level of the compiler IR rather than the source code. This approach has two advantages: first, the IR has a much simpler syntax and semantics than a high-level programming language, which considerably eases the logical encoding of the verification problem; second, the program analysed at the IR level is much closer to the program that is actually executed on the computer, since semantic ambiguities have already been resolved by the compiler.

The first step in our process is thus the translation of machine code to LLVM IR. There are several tools that can be used for this purpose. Our experiments show that MC-sema [L1] is the best compromise for the x86 architecture. MC-sema is a framework for translating x86 binaries into LLVM bitcode. It models x86 instructions as operations on a register context structure that contains all registers and flags, and expresses instruction semantics as modifications of the members of this structure. In the future, we will have to explore alternatives for other architectures, since x86 is used in only a small number of embedded systems. Here, what matters is the proof of concept.

Now that we have the intermediate representation of our binary file, the second step is to verify it. There are several alternatives for verifying LLVM IR, including DIVINE or a translation to a classical tool such as SPIN. As fault injection mainly introduces violations of safety properties and invariants, we propose to use LLBMC [L2]. This tool employs bounded model checking with an SMT solver for the theory of bitvectors and arrays, and thus achieves precision down to the level of single bits. Bounded model checking was originally introduced in the context of hardware verification and is known to be well suited to safety properties.

In conclusion, this article presents a proof-of-concept approach for fault-injection analysis via formal models. It has been successfully applied to case studies, including the example given above. Before the approach can be deployed, the fault models need to be improved, and LLVM and MC-sema need to be extended to a wider class of x86 instructions as well as to other architectures such as ARM, the standard for smart phones.

Links:

[L1] https://github.com/trailofbits/mcsema/

[L2] http://llbmc.org/

References:

[1] H. Bar-El, et al.: “The Sorcerer's Apprentice Guide to Fault Attacks”, in Proc. of the IEEE, vol. 94, issue 2, pp. 370-382, 2006.

[2] D. Gruss, C. Maurice, S. Mangard: “Rowhammer.js: A Remote Software-Induced Fault Attack in JavaScript”, 13th Conf. on DIMVA, 2016.

[3] C. Baier, J.-P. Katoen: “Principles of Model Checking”, MIT Press, 2008.

Please contact:

Nisrine Jafri

Inria, France