by Christophe Ponsard, Philippe Massonet and Gautier Dallons (CETIC)

Many safety-critical systems, such as transportation systems, are integrating more and more software-based systems and are becoming connected. In some domains, such as automotive and rail, software is gradually taking over control from human operators, and vehicles are evolving towards autonomy. Such cyber-physical systems require high assurance of two interrelated properties: safety and security. In this context, safety and security can be co-engineered based on sound techniques borrowed from goal-oriented requirements engineering (RE).

Transportation systems increasingly rely on software for monitoring and controlling the physical world, including assisting or replacing human operation (e.g., driver assistance in cars, automated train operation), which raises their safety-criticality. At the same time, the increasing connectivity of (cyber-physical) systems also increases their exposure to security threats, which in turn can lead to safety hazards. This calls for a co-engineering approach to security and safety [1].

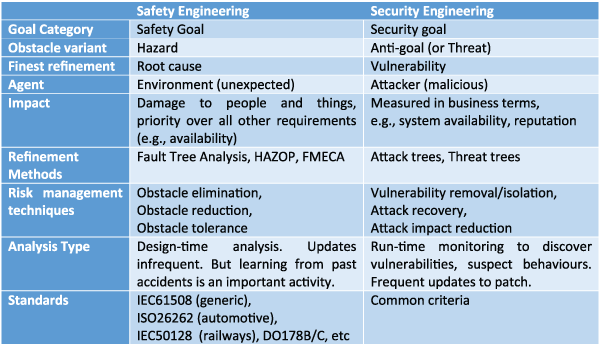

Requirements engineering (RE), a key step in any system development, is particularly important in transport systems. Over the years, very efficient methods have been developed for dealing with both safety and security. Goal-oriented requirements engineering is a major RE approach that developed the concept of obstacle analysis, primarily to reason about safety [2]. An obstacle can be considered as any undesirable property (e.g., a train collision) that directly obstructs system goals, which are desired properties (e.g., passenger safety). This work was later revisited to deal with security [3]. It introduced the notion of malicious agents having an interest in the realisation of some anti-goal (as the dual of ‘normal’ agents cooperating towards the achievement of a system goal). Table 1 compares the safety and security approaches side by side and highlights their strong common methodological ground.
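To make the vocabulary concrete, the following minimal Python sketch represents goals together with the obstacles (safety view) and anti-goals (security view) that obstruct them. It is an illustration only, not the metamodel of [2], [3] or of any tool: the class names `Goal` and `Obstruction`, the helper `collect_obstructions`, and the spoofing anti-goal with its agent are assumptions introduced here; only the train collision and passenger safety examples come from the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Obstruction:
    """An undesirable property that obstructs a goal.

    kind is "obstacle" (safety view) or "anti-goal" (security view,
    i.e. something a malicious agent wants to achieve).
    """
    name: str
    kind: str
    agent: str = ""  # malicious agent; only meaningful for anti-goals


@dataclass
class Goal:
    """A desired system property, possibly refined into sub-goals."""
    name: str
    subgoals: List["Goal"] = field(default_factory=list)
    obstructions: List[Obstruction] = field(default_factory=list)


def collect_obstructions(goal: Goal) -> List[Tuple[str, Obstruction]]:
    """Walk the goal tree depth-first and return (goal name, obstruction) pairs."""
    found = [(goal.name, o) for o in goal.obstructions]
    for sub in goal.subgoals:
        found.extend(collect_obstructions(sub))
    return found


# "Passenger safety" and "Train collision" come from the text; the sub-goal,
# the anti-goal and the agent name are hypothetical.
model = Goal(
    "Passenger safety",
    obstructions=[Obstruction("Train collision", "obstacle")],
    subgoals=[
        Goal(
            "Signalling commands are authentic",
            obstructions=[
                Obstruction("Signalling commands spoofed", "anti-goal",
                            agent="Remote attacker")
            ],
        )
    ],
)

for goal_name, obstruction in collect_obstructions(model):
    print(f"{obstruction.kind}: '{obstruction.name}' obstructs '{goal_name}'")
```

Keeping obstacles and anti-goals in one structure, distinguished only by `kind`, mirrors the common methodological ground of the two analyses.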

While safety and security are generally considered separately, we have combined the two approaches for co-engineering purposes. This makes intuitive sense given their strong common foundations, including the ability to drive the discovery of hazards/threats and to identify ways of addressing them. A recent exhaustive survey by the MERGE ITEA 2 project showed the main trends in the area [L1]:

- Safety is at the inner core of the system, providing de facto better isolation. Security layers or different criticality levels are deployed around it.

- Models are used for both safety and security. The safety impact of security failures is considered, hence connecting the two kinds of analysis.

- Both security incidents and system failures are monitored so that global system dependability can be continually evaluated.

Using the Objectiver requirements engineering tool [L2], we experimented with some variants of co-engineering involving different roles working cooperatively on the same model: the system engineer for global system behaviour and architecture, the safety engineer to identify failure modes and their propagation, and security engineers to analyse possible attacks. Proposed resolutions relating to security and safety are reviewed in a second round. Regular global validation reviews are also organised with all analysts.

Table 1: Comparison of safety and security engineering approaches.

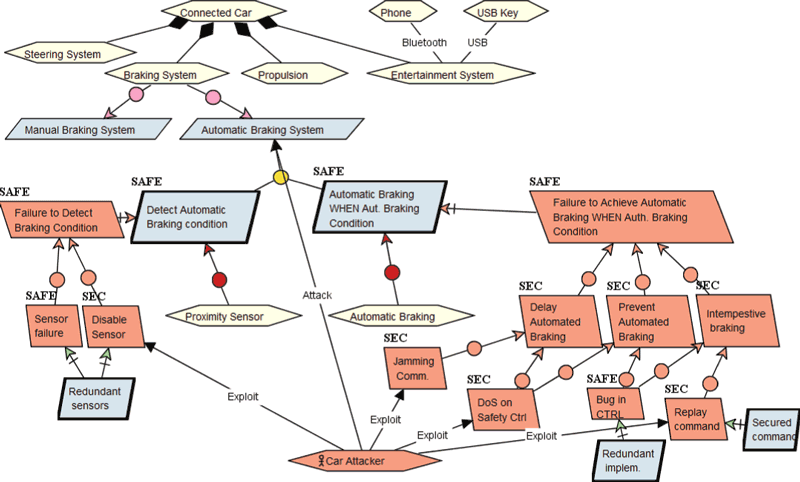

Figure 1. Attacker tree on a safety function.

Figure 1 shows an excerpt of the security/safety co-engineering of a connected car featuring automated braking, based on the SAE recommended practices [L3]. The top level shows the general system structure and identifies the main sub-systems. The automated braking sub-system is then detailed based on two key milestones: condition detection and then braking. Next to each requirement, a mixed view of the result of the hazard/threat analysis is shown (these are usually presented in separate diagrams). Specific obstacles are tagged as SAFE or SEC depending on the process that identified them. Specific attacker profiles can also be captured (there is a single one here). Some resolution techniques proposed in [2] and [3] are then applied, e.g., to make the attack infeasible or to reduce its impact. Some resolutions can also address mixed threats and thus reduce the global cost of making the whole system dependable.
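The mixed view described above can be approximated with a small Python sketch that tags each obstacle as SAFE or SEC, attaches resolutions, and then looks for resolutions covering both kinds of obstacle. This is a sketch under stated assumptions rather than the model of Figure 1: the obstacle and resolution names are hypothetical, as are the names `TaggedObstacle`, `mixed_resolutions` and `unresolved`; only the two milestones (condition detection and braking) and the SAFE/SEC tags come from the text.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaggedObstacle:
    """An obstacle attached to a requirement, tagged by the analysis that found it."""
    name: str
    requirement: str
    tag: str  # "SAFE" or "SEC"
    resolutions: List[str] = field(default_factory=list)


def mixed_resolutions(obstacles: List[TaggedObstacle]) -> List[str]:
    """Return resolutions that address at least one SAFE and one SEC obstacle."""
    tags_by_resolution = defaultdict(set)
    for obstacle in obstacles:
        for resolution in obstacle.resolutions:
            tags_by_resolution[resolution].add(obstacle.tag)
    return sorted(r for r, tags in tags_by_resolution.items()
                  if tags == {"SAFE", "SEC"})


def unresolved(obstacles: List[TaggedObstacle]) -> List[str]:
    """Return the names of obstacles that have no resolution yet."""
    return [o.name for o in obstacles if not o.resolutions]


# Hypothetical analysis results for the automated braking sub-system.
analysis = [
    TaggedObstacle("Obstacle sensor failure", "Condition detection", "SAFE",
                   ["Redundant sensing"]),
    TaggedObstacle("Sensor data spoofed", "Condition detection", "SEC",
                   ["Redundant sensing", "Message authentication"]),
    TaggedObstacle("Brake actuator failure", "Braking", "SAFE",
                   ["Fail-safe braking"]),
    TaggedObstacle("Brake command injected remotely", "Braking", "SEC"),
]

print("Mixed resolutions:", mixed_resolutions(analysis))
print("Still unresolved:", unresolved(analysis))
```

A resolution returned by `mixed_resolutions` is a candidate for reducing the overall cost, since one measure mitigates both a failure and an attack; the list from `unresolved` is what a second review round would need to pick up.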

The next step in our research is to delve deeper into cyber-threats in the context of railway systems. On the tool side, we plan to adapt our method to mainstream system engineering tools and methods, such as Capella, within the scope of the INOGRAMS project and a follow-up project [L4].

Links:

[L1] http://www.merge-project.eu

[L2] http://www.objectiver.com

[L3] http://articles.sae.org/14503

[L4] https://www.cetic.be/INOGRAMS-2104

References:

[1] D. Schneider, E. Armengaud, E. Schoitsch: “Towards Trust Assurance and Certification in Cyber-Physical Systems”, SAFECOMP Workshops, 2014.

[2] A. van Lamsweerde, E. Letier: “Handling Obstacles in Goal-Oriented Requirements Engineering”, IEEE Trans. on Software Engineering, Vol. 26 No. 10, Oct. 2000.

[3] A. van Lamsweerde et al.: “From System Goals to Intruder Anti-Goals: Attack Generation and Resolution for Security Requirements Engineering”, in Proc. of RHAS Workshop, 2003.

Please contact:

Christophe Ponsard

Tel: +32 472 56 90 99