by Pierre Senellart, Serge Abiteboul and Rémi Gilleron

A large part of the Web is hidden from present-day search engines because it lies behind forms. Here we present current research (centred on the PhD thesis of the first author) on the fully automatic understanding and use of the services of the so-called hidden Web.

Access to Web information relies primarily on keyword-based search engines. These search engines deal with the 'surface Web', the set of Web pages directly accessible through hyperlinks, and mostly ignore the vast amount of highly structured information that lies behind forms and makes up the 'hidden Web' (also known as the 'deep Web' or 'invisible Web'). This includes, for instance, Yellow Pages directories, research publication databases and weather information services. The purpose of the work presented here, a collaboration between researchers from INRIA project-teams Gemo and Mostrare and the University of Oxford (Georg Gottlob), is the automated exploitation of hidden-Web resources, and more precisely the discovery, analysis, understanding and querying of such resources.

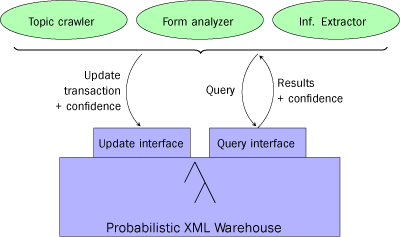

An original aspect of this approach is the avoidance of any kind of human supervision, which makes the problem quite broad and difficult. To cope with this difficulty, we assume that we are working with services relevant to a specific domain of interest (eg research publications) that is described by a domain knowledge base in a predefined format (an ontology). The approach is content-centric in the sense that the core of the system consists of a content warehouse of hidden-Web services, with independent modules enriching the knowledge about these services so they can be better exploited. For instance, one module may be responsible for discovering relevant new services (eg URLs of forms or Web services), another for analysing the structure of forms, and so forth.
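
The content-centric organisation can be pictured with a small sketch. It assumes that each service is a record of accumulated facts and that each module is a function proposing new facts about a record. The warehouse keeps applying modules until none of them contributes anything new. The class names, the fact keys and the two toy modules (`detect_form`, `guess_domain`) are hypothetical illustrations, not the system's actual components.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ServiceRecord:
    """Everything the warehouse currently knows about one hidden-Web service."""
    url: str
    facts: Dict[str, object] = field(default_factory=dict)


# A module inspects a record and proposes new facts (possibly none).
Module = Callable[[ServiceRecord], Dict[str, object]]


class ContentWarehouse:
    def __init__(self) -> None:
        self.services: Dict[str, ServiceRecord] = {}
        self.modules: List[Module] = []

    def add_service(self, url: str) -> ServiceRecord:
        return self.services.setdefault(url, ServiceRecord(url))

    def register(self, module: Module) -> None:
        self.modules.append(module)

    def run(self, max_rounds: int = 10) -> None:
        """Apply every module to every record until no module adds a new fact."""
        for _ in range(max_rounds):
            changed = False
            for record in self.services.values():
                for module in self.modules:
                    for key, value in module(record).items():
                        if key not in record.facts:  # modules only add, never overwrite
                            record.facts[key] = value
                            changed = True
            if not changed:
                break


# Two toy modules (hypothetical heuristics, for illustration only).
def detect_form(record: ServiceRecord) -> Dict[str, object]:
    return {"is_form": "search" in record.url}


def guess_domain(record: ServiceRecord) -> Dict[str, object]:
    # Builds on a fact contributed by another module.
    return {"domain": "research publications"} if record.facts.get("is_form") else {}


warehouse = ContentWarehouse()
record = warehouse.add_service("http://example.org/search")
warehouse.register(detect_form)
warehouse.register(guess_domain)
warehouse.run()
# record.facts now holds both "is_form" and "domain"
```

Because modules communicate only through the shared records, new modules can be added without modifying the existing ones. This is the independence the content-centric design aims for.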

Typically the data to be managed is rather irregular and often tree-structured, which suggests the use of a semi-structured data model. We use the Extensible Markup Language (XML) since it is a standard for the Web. Furthermore, the different agents that cooperate to build the content warehouse are inherently imprecise, since they typically rely on machine-learning techniques or heuristics. We have thus developed a probabilistic tree (or XML) model that is appropriate to this context. Conceptually, probabilistic trees are regular trees whose nodes are annotated with conjunctions of independent random variables (and their negations). They have theoretical properties that allow queries and updates to be evaluated efficiently.
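
The following minimal sketch shows how such annotations can be read. Assume that a node exists exactly when every literal on its root-to-node path is true. Since the variables are independent, the probability of that node is then a product over the distinct variables on the path, or zero if the path requires both a variable and its negation. The tree, the variable names `w1` and `w2` and their probabilities are made up for illustration. The sketch only computes node probabilities and does not attempt the efficient query and update algorithms mentioned above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# A literal is a random variable or its negation: ("w1", True) means w1,
# ("w1", False) means "not w1".
Literal = Tuple[str, bool]


@dataclass
class PNode:
    label: str
    condition: List[Literal] = field(default_factory=list)  # a conjunction
    children: List["PNode"] = field(default_factory=list)


def find_path(root: PNode, label: str) -> Optional[List[PNode]]:
    """Root-to-node path to the first node with the given label, if any."""
    if root.label == label:
        return [root]
    for child in root.children:
        sub = find_path(child, label)
        if sub is not None:
            return [root] + sub
    return None


def presence_probability(path: List[PNode], probs: Dict[str, float]) -> float:
    """Probability that the last node of `path` (hence all its ancestors) exists.

    The node exists iff every literal on the path is true. Variables are
    independent, so the result is a product over the distinct variables,
    or 0 if the path requires both w and not-w.
    """
    assignment: Dict[str, bool] = {}
    for node in path:
        for var, positive in node.condition:
            if assignment.get(var, positive) != positive:
                return 0.0  # contradictory conjunction
            assignment[var] = positive
    result = 1.0
    for var, positive in assignment.items():
        result *= probs[var] if positive else 1.0 - probs[var]
    return result


# Toy tree: one module (w1) believes the form has a field; another (w2)
# hesitates between an author field and a title field.
tree = PNode("service", children=[
    PNode("field", [("w1", True)], children=[
        PNode("concept:author", [("w2", True)]),
        PNode("concept:title", [("w2", False)]),
    ]),
])
probs = {"w1": 0.8, "w2": 0.6}
p_author = presence_probability(find_path(tree, "concept:author"), probs)  # ≈ 0.48
p_title = presence_probability(find_path(tree, "concept:title"), probs)    # ≈ 0.32
```

The two concept nodes are mutually exclusive because they depend on `w2` and its negation. This illustrates how a single tree can encode alternative, imprecise guesses produced by different modules.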

Consider now a service of the hidden Web, say an HTML form, that is relevant to the particular application domain. For it to be reused automatically, several aspects of the service must be understood: the structure of its input and output, and the semantics of the function it supports. The system first tries to understand the structure of the form and relate its fields to concepts from the domain of interest. It then attempts to understand where and how the resulting records are represented in an HTML result page. For the former problem (the service input), we use a combination of heuristics (to associate domain concepts with form fields) and probing of the fields with domain instances (to confirm or invalidate these guesses). For the latter (the service output), we use a supervised machine-learning technique adapted to tree-like information (namely, conditional random fields for XML) to correct and generalize an automatic, imperfect and imprecise annotation derived from the domain knowledge. As a consequence of these two steps, the structure of the inputs and outputs of the form is understood (with some degree of imprecision, of course), and a signature for the service is thus obtained. It is then straightforward to wrap the form as a standard Web service described in the Web Services Description Language (WSDL).
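
The probing step for form inputs can be sketched as follows. The sketch assumes a function `submit(field, value)` that fills in one field, submits the form and returns the number of result records. It also assumes a nonsense control value as a baseline and a simple majority threshold. All of these are hypothetical choices, and a real system would have to extract the records from the result page, which is the output problem described above. Here the form is faked in memory so that the example runs.

```python
from typing import Callable, Dict, List


def confirm_by_probing(field_name: str, concept: str,
                       instances: Dict[str, List[str]],
                       submit: Callable[[str, str], int],
                       control: str = "zzqxjv",
                       threshold: float = 0.5) -> bool:
    """Confirm (or reject) a heuristic guess that `field_name` expects `concept`.

    The guess is kept if enough domain instances of the concept return more
    results than a nonsense control value does.
    """
    baseline = submit(field_name, control)
    probes = instances[concept]
    hits = sum(1 for value in probes if submit(field_name, value) > baseline)
    return hits / len(probes) >= threshold


# A fake in-memory form with two fields (hypothetical, for illustration).
RECORDS = [
    {"author": "Abiteboul", "title": "Foundations of Databases"},
    {"author": "Gottlob", "title": "Monadic Datalog"},
    {"author": "Senellart", "title": "Probabilistic XML"},
]
FIELD_TO_ATTR = {"q_author": "author", "q_title": "title"}


def fake_submit(field_name: str, value: str) -> int:
    attr = FIELD_TO_ATTR[field_name]
    return sum(1 for r in RECORDS if value.lower() in r[attr].lower())


# Domain instances taken from a (toy) domain knowledge base.
DOMAIN = {"author": ["Gottlob", "Abiteboul", "Knuth"], "title": ["Datalog", "XML"]}
```

A call such as `confirm_by_probing("q_author", "author", DOMAIN, fake_submit)` keeps the guess because two of the three author names yield results. The same call on `q_title` rejects it. The control value guards against forms that return results for any input.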

Finally, it is necessary to understand the semantic relations that exist between the inputs and outputs of a service. We have elaborated a theoretical framework for discovering relationships between two database instances over distinct and unknown schemata. The problem of understanding the relationship between two instances is formalized as that of obtaining a schema mapping (a set of sentences in some logical language) so that a minimum repair of this mapping provides a perfect description of the target instance given the source instance. We are currently working on the practical application of this theoretical framework to the discovery of such mappings between data found in different sources of the hidden Web.
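
The core intuition of minimum-repair mappings can be illustrated with a deliberately simplified scoring. The score is the size of a candidate mapping plus the number of facts that must be added to, or removed from, its output to obtain the target instance exactly. This is only a toy stand-in for the framework described above. Candidate mappings are plain Python functions rather than logical sentences, their sizes are assigned by hand, and the instances are invented.

```python
from typing import Callable, Dict, Set, Tuple

Fact = Tuple[str, ...]
Instance = Set[Fact]
# name -> (size of the mapping, function computing its output on a source)
Candidates = Dict[str, Tuple[int, Callable[[Instance], Instance]]]


def repair_cost(predicted: Instance, target: Instance) -> int:
    """Facts the mapping fails to produce plus facts it produces wrongly."""
    return len(target - predicted) + len(predicted - target)


def best_mapping(source: Instance, target: Instance,
                 candidates: Candidates) -> Tuple[str, int]:
    """Candidate minimising size + repair cost (first one wins ties)."""
    costs = {name: size + repair_cost(fn(source), target)
             for name, (size, fn) in candidates.items()}
    best = min(costs, key=costs.get)
    return best, costs[best]


# Source: (author, title) pairs; target: titles, including one unexplained fact.
source = {("Gottlob", "Monadic Datalog"), ("Senellart", "Probabilistic XML")}
target = {("Monadic Datalog",), ("Probabilistic XML",), ("Foundations of Databases",)}
candidates: Candidates = {
    "copy_title": (1, lambda s: {(t,) for (_, t) in s}),
    "copy_author": (1, lambda s: {(a,) for (a, _) in s}),
    "empty": (0, lambda s: set()),
}
```

With these inputs, `best_mapping(source, target, candidates)` selects `copy_title` at cost 2. One fact must be repaired, yet this mapping still beats the empty mapping (cost 3) and `copy_author` (cost 6). This shows how a minimum repair tolerates noise in the target instance.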

At the end of the analysis phase we have obtained a number of Web services whose semantics is explained in terms of a global schema specific to the application. These services are described in a logical, Datalog-like notation that takes into account the types of input and output and the semantic relations between them. The services can now be seen as views over the domain data, and the system can use them to answer user queries with essentially standard database techniques.
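
Input types matter because a service can only be called once values for all its inputs are known. A minimal sketch of that constraint follows, assuming each service signature is reduced to a set of input concepts and a set of output concepts. Starting from the constants given in a user query, the sketch repeatedly calls any service whose inputs are all available until the requested concept is reached. The service names and signatures are hypothetical. The sketch only checks that such a call sequence exists and does not perform the full rewriting of queries using views.

```python
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

# service name -> (input concepts, output concepts); hypothetical signatures
Signature = Tuple[FrozenSet[str], FrozenSet[str]]


def plan_calls(services: Dict[str, Signature], known: Set[str],
               wanted: str) -> Optional[List[str]]:
    """Order in which services can be called to obtain `wanted`, or None."""
    known = set(known)
    plan: List[str] = []
    progress = True
    while wanted not in known and progress:
        progress = False
        for name, (inputs, outputs) in services.items():
            # Callable only if all inputs are known; useful only if it adds something.
            if name not in plan and inputs <= known and not outputs <= known:
                plan.append(name)
                known |= outputs
                progress = True
                if wanted in known:
                    break
    return plan if wanted in known else None


SERVICES: Dict[str, Signature] = {
    "publications_by_author": (frozenset({"author"}), frozenset({"title", "year"})),
    "citations_by_title": (frozenset({"title"}), frozenset({"citation_count"})),
    "authors_by_affiliation": (frozenset({"affiliation"}), frozenset({"author"})),
}
```

For instance, a query giving only an affiliation and asking for citation counts yields the chain `authors_by_affiliation`, `publications_by_author`, `citations_by_title`. A query giving only a year yields `None`, because no service accepts a year as input.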

Links:

http://pierre.senellart.com/phdthesis/

http://treecrf.gforge.inria.fr/

http://r2s2.futurs.inria.fr/

Please contact:

Pierre Senellart, INRIA Futurs, France

Tel: +33 1 74 85 42 25

E-mail: pierre@senellart.com
