by Harry Rudin

Digital-computer-based analysis and simulation have long been used to solve the most difficult scientific and engineering problems. As computer power hurtles along the performance curve predicted by Moore's Law, these techniques have become more and more successful, and over an ever-increasing spectrum of problems. Encouraged by this success, developers have built highly parallel computer architectures (supercomputers) to attack enormously complex problems. Supercomputers are even being used to improve supercomputer technology. Two examples are discussed here: a popular software package and progress in semiconductor analysis.

'Supercomputers', or better, high-performance computers (HPCs), use technology similar to that in our laptop computers. However, an HPC is massively parallel, with sophisticated communication paths connecting the component processors with one another and with the processors' memory. The trick is to exploit the parallel processing capability without letting inordinately heavy communication overhead slow the overall system down. The right architecture combined with the right software can yield processing speeds some three orders of magnitude higher than those of a modern laptop.
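Here is a deliberately simplified Python model of the communication trade-off. It is a hypothetical model, not a measurement of any real machine. It assumes the work divides perfectly among `p` processors, and that each processor also pays a communication cost growing as `c * log2(p)`, as in a tree-style data exchange. The function names and numbers are illustrative only.

```python
"""Toy model of parallel speedup with communication overhead.

Hypothetical assumptions: a job taking t_serial seconds on one processor
splits perfectly into p equal parts, and each processor also spends
c * log2(p) seconds exchanging data. Real HPC systems are far more complex.
"""
import math


def run_time(t_serial: float, p: int, c: float) -> float:
    """Wall-clock time on p processors: compute share plus communication."""
    compute = t_serial / p
    communicate = c * math.log2(p) if p > 1 else 0.0
    return compute + communicate


def speedup(t_serial: float, p: int, c: float) -> float:
    """Speedup relative to running on a single processor."""
    return t_serial / run_time(t_serial, p, c)


def best_processor_count(t_serial: float, c: float, max_p: int) -> int:
    """Power-of-two processor count (up to max_p) with the largest speedup."""
    candidates = [2 ** k for k in range(int(math.log2(max_p)) + 1)]
    return max(candidates, key=lambda p: speedup(t_serial, p, c))


if __name__ == "__main__":
    T = 1000.0  # hypothetical: seconds of work on one processor
    for c in (0.0, 0.01, 1.0):  # hypothetical communication cost per log2(p) step
        p_best = best_processor_count(T, c, 4096)
        print(f"c={c}: best p={p_best}, speedup={speedup(T, p_best, c):.0f}")
```

With zero communication cost, the speedup grows linearly with `p`. With a large `c`, the best speedup is reached well below the largest processor count. This is why the architecture and the software have to be designed together.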

HPC applications cover a huge area. A few examples are:

- more accurate weather forecasting
- modeling and analyzing atmospheric pollutant flows
- development of new pharmaceuticals
- analysis of ocean waves breaking on sea walls
- designing better automobiles
- optimizing fire-escape routes in large buildings
- prediction of the immunological response to drugs
- natural catastrophe damage estimation for reinsurance
- flow of air over aircraft wings
- analysis of thermonuclear fusion for power generation

These and other applications hold enormous promise for the future. Perhaps the most impressive is the last, where the hope is that, beginning in a decade's time, we will see a way to generate electric power without the radiation problems posed by nuclear waste.

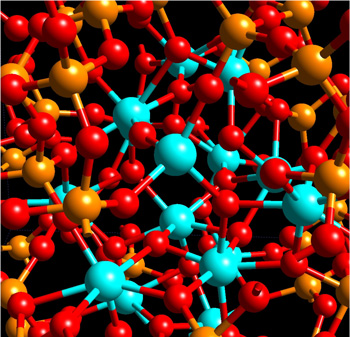

One of the techniques enabled by HPC is the modern application of 'Molecular Dynamics'. This is a specially tailored form of numerical computer analysis in which the interactions of atoms and molecules with one another are simulated, based on statistical mechanics and the relevant physical laws. To uncover the physical and chemical properties of a material, a large number of atoms or molecules must be simulated, on the order of one thousand. A particularly successful software system for such simulations was initiated at the IBM Zurich Research Laboratory and is called CPMD, for Car-Parrinello Molecular Dynamics. CPMD continues to be refined there and at partner institutions. The software is distributed under a license, free of charge. The CPMD code has been widely used to analyze the physical and chemical properties of many different kinds of compounds. It has proven so popular that it currently has over six thousand registered users worldwide.
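The basic time-stepping loop of molecular dynamics can be sketched in a few dozen lines of Python. This is a minimal classical sketch, not CPMD. It assumes a Lennard-Jones pair potential in reduced units (epsilon = sigma = mass = 1) and the velocity Verlet integrator. Car-Parrinello dynamics, by contrast, derives the forces from the electronic structure. The three-atom starting configuration is hypothetical.

```python
"""Minimal classical molecular-dynamics sketch (velocity Verlet).

Illustrative assumption: atoms interact through a Lennard-Jones pair
potential in reduced units (epsilon = sigma = mass = 1). CPMD computes
forces from the electronic structure instead; only the stepping loop is analogous.
"""
from typing import List, Tuple

Vec = List[float]


def lj_forces(pos: List[Vec]) -> Tuple[List[Vec], float]:
    """Return per-atom forces and total Lennard-Jones potential energy."""
    n = len(pos)
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    energy = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            d = [pos[i][k] - pos[j][k] for k in range(3)]
            r2 = sum(x * x for x in d)
            inv6 = 1.0 / r2 ** 3  # (sigma / r)^6 with sigma = 1
            energy += 4.0 * (inv6 * inv6 - inv6)
            # F_i = 24 (2 r^-12 - r^-6) / r^2 * d; equal and opposite on atom j
            scale = 24.0 * (2.0 * inv6 * inv6 - inv6) / r2
            for k in range(3):
                forces[i][k] += scale * d[k]
                forces[j][k] -= scale * d[k]
    return forces, energy


def kinetic(vel: List[Vec]) -> float:
    """Kinetic energy with unit masses."""
    return 0.5 * sum(v * v for vi in vel for v in vi)


def velocity_verlet(pos: List[Vec], vel: List[Vec], dt: float, steps: int) -> List[float]:
    """Advance pos and vel in place; return the total energy after each step."""
    forces, pot = lj_forces(pos)
    energies = [kinetic(vel) + pot]
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * forces[i][k]  # first half kick
                pos[i][k] += dt * vel[i][k]  # drift
        forces, pot = lj_forces(pos)  # forces at the new positions
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * forces[i][k]  # second half kick
        energies.append(kinetic(vel) + pot)
    return energies


if __name__ == "__main__":
    positions = [[0.0, 0.0, 0.0], [1.2, 0.0, 0.0], [0.6, 1.05, 0.0]]  # hypothetical
    velocities = [[0.0, 0.0, 0.0] for _ in positions]
    e = velocity_verlet(positions, velocities, dt=0.001, steps=1000)
    print(f"energy drift: {abs(e[-1] - e[0]):.2e}")
```

The pairwise loop costs O(N²) per step, so a thousand-atom run in pure Python would be slow. This is exactly the kind of workload that benefits from optimized, parallel codes on HPC systems.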

Recently CPMD has been applied to analyzing a new gate dielectric intended to improve transistor performance. A very promising material is hafnium dioxide (HfO2). Hafnium dioxide looked like the ideal candidate for a new gate dielectric, but there were concerns that its use might lead to unforeseen consequences in semiconductor production lines. CPMD was used to gain a full understanding of the physics of hafnium dioxide and of its behavior when combined with silicon in semiconductors. Even with a high-performance computer running the efficient CPMD code, the analysis was an enormous undertaking. Some fifty different models of the use of hafnium dioxide were analyzed, each simulating the interactions of up to 600 atoms. On the IBM Zurich Research Laboratory's Blue Gene/L supercomputer system with 4096 processors, each model took five days to complete. Thus some 250 supercomputer days were committed to this project. All of this would have been impossible just one decade ago. But the result was well worth the effort: the concerns have been laid to rest, and the developers can now sleep well.
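The computing budget can be tallied roughly as follows. This assumes that every model run occupied all 4096 processors for the full five days. The text implies this but does not state it outright, so the processor-day figure is an estimate.

$$
\begin{aligned}
50\ \text{models} \times 5\ \tfrac{\text{days}}{\text{model}} &= 250\ \text{days},\\
250\ \text{days} \times 4096\ \text{processors} &= 1\,024\,000\ \text{processor-days} \approx 2800\ \text{processor-years}.
\end{aligned}
$$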

In effect, this work closes a loop: supercomputers are used to increase the speed of the processors needed to make supercomputers even more powerful. In the words of Alessandro Curioni, computational materials scientist and supercomputing expert at IBM's Zurich lab: "So ... we are able to use supercomputers to investigate materials that will be eventually used in the next generation of supercomputers."

Links:

http://www.cpmd.org/

http://www-03.ibm.com/press/us/en/pressrelease/21142.wss

http://domino.watson.ibm.com/comm/pr.nsf/pages/news.20021212_supercomputer.html

Please contact:

Alessandro Curioni

IBM Zurich Research Lab, Switzerland

E-mail: cur@zurich.ibm.com