At the Chair of Computational Science at ETH Zurich, we are developing multiscale particle methods for simulating diverse physical systems [1], with an emphasis on their implementation on high-performance computing (HPC) architectures. Multiscale simulations often require a level of algorithmic complexity that is difficult to translate into effective parallel algorithms. A key aspect of our approach is therefore to develop numerical methods with attention not only to their accuracy and stability but also to their software engineering and implementation on massively parallel computer architectures.

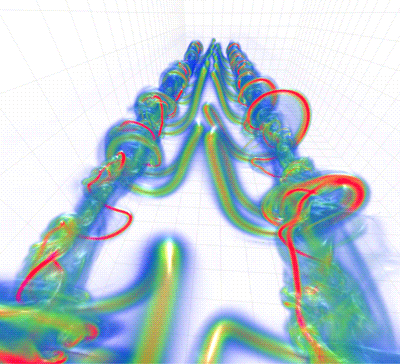

In one such example from the last two years, we have developed particle methods for the study of vortex-dominated flows. We collaborated with researchers from the IBM Zurich Research Laboratory to port these methods onto massively parallel computer architectures. This enabled state-of-the-art direct numerical simulations of aircraft vortex wakes [2], which employed billions of vortex particles and exhibited excellent scalability for up to 16,000 nodes of the IBM Blue Gene/L (BG/L).

Resurrecting Vortex Methods for HPC

This effort represents the culmination of fifteen years of research into vortex methods [2], and has combined a number of ingredients that only a few years ago were considered unsuitable for flow simulations.

We first resurrected a numerical method (vortex methods) that offers Lagrangian adaptivity and a minimal use of computational elements for vortical flows. Vortex methods were in fact the first computational fluid dynamics (CFD) technique employed to study fluids, in the 1930s (vortex sheets were computed by hand by Rosenhead and his human 'computers'), and were also used in the first digital computer flow simulations in the 1960s and 1970s. They subsequently fell out of favour due to their inaccuracy, which is largely attributed to the distortion of the Lagrangian computational elements.

We then addressed the low memory available per processor in massively parallel computer architectures such as the BG/L. This architecture was considered unsuitable for flow simulations that require large per-processor memory allocations and extensive communication when solving elliptic differential equations, as imposed here by our velocity-vorticity formulation.
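For readers unfamiliar with the term, the velocity-vorticity formulation of incompressible viscous flow is usually written as below. This is the standard textbook form, given here only for orientation; the notation (velocity u, vorticity ω, kinematic viscosity ν) is an assumption and may differ from that of [2]. The second equation is the elliptic (Poisson) problem that has to be solved at every time step to recover the velocity from the vorticity.

```latex
\begin{align}
  \frac{\partial \boldsymbol{\omega}}{\partial t}
    + (\mathbf{u}\cdot\nabla)\,\boldsymbol{\omega}
    &= (\boldsymbol{\omega}\cdot\nabla)\,\mathbf{u}
    + \nu\,\nabla^{2}\boldsymbol{\omega},
  \qquad \boldsymbol{\omega} = \nabla\times\mathbf{u}, \\
  \nabla^{2}\mathbf{u} &= -\,\nabla\times\boldsymbol{\omega},
  \qquad \nabla\cdot\mathbf{u} = 0 .
\end{align}
```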

The solution to this multiobjective problem was found by reformulating several aspects of vortex methods: coupling particles with grids to regularize their locations, developing novel data structures for the distribution of the particles, and adopting fast Poisson solvers implemented efficiently on the BG/L architecture. Last but not least, the development of software libraries and open-source software (see our lab Web site) has enabled the sustained development of these tools over a number of years.
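To give a flavour of what coupling particles with grids means in practice, the following is a minimal one-dimensional sketch of redistributing particle strengths onto a regular periodic grid. It assumes the third-order M4' interpolation kernel, which is commonly used in remeshed particle methods; the article does not say which kernel is used, and the function names `m4_prime` and `particles_to_grid` are hypothetical, chosen for illustration only.

```python
import math


def m4_prime(s):
    """M4' interpolation kernel; s is a distance in units of the grid spacing.

    Assumed kernel for illustration (not confirmed by the article).
    """
    s = abs(s)
    if s <= 1.0:
        return 1.0 - 2.5 * s**2 + 1.5 * s**3
    if s <= 2.0:
        return 0.5 * (2.0 - s) ** 2 * (1.0 - s)
    return 0.0


def particles_to_grid(positions, strengths, n_nodes, h):
    """Redistribute particle strengths onto a periodic 1D grid of spacing h.

    Each particle contributes to its four nearest nodes. The weights sum
    to one, so the total strength (circulation) is conserved.
    """
    grid = [0.0] * n_nodes
    for x, g in zip(positions, strengths):
        i0 = math.floor(x / h)           # node immediately to the left
        for j in range(i0 - 1, i0 + 3):  # four-node kernel support
            grid[j % n_nodes] += g * m4_prime(x / h - j)
    return grid
```

After such a step the grid nodes can be taken as the new, regularly spaced particles, which is what counteracts the distortion of the Lagrangian elements. The same grid values can also be handed to a grid-based Poisson solver.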

How Do You Look at Two Billion Particles?

Massively parallel simulations using up to two billion particles have provided us with a unique insight into the development of long-wavelength instabilities in aircraft wakes.

At the same time, a new challenge has emerged: the visualization and processing of the large data sets produced by the simulations. Each snapshot of the flow field amounts to about 100 Gbytes of data, so that the usual techniques such as flow animation and walkthrough visualization themselves become computationally intensive tasks. The continuum flow field needs to be reconstructed from the particle properties, and high-performance visualization techniques are necessary in order to process the data. We are pursuing this approach in collaboration with scientists at the Swiss National Supercomputing Center (CSCS) in Manno, aiming to provide a unique look at the intricacies of vortex structures in aircraft wakes. A complementary approach involves the use of multiresolution techniques such as wavelets, leading to in situ analysis and structure-informed visualization.
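A back-of-the-envelope estimate shows that a snapshot size of this order is plausible. The sketch below assumes that each particle stores a three-component position and a three-component vorticity vector in double precision; this layout is an assumption made for illustration and is not stated in the article, and `snapshot_bytes` is a hypothetical helper.

```python
def snapshot_bytes(n_particles, values_per_particle=6, bytes_per_value=8):
    """Raw size in bytes of one particle snapshot under the assumed layout:
    3 position + 3 vorticity components, 8 bytes (double precision) each."""
    return n_particles * values_per_particle * bytes_per_value


size = snapshot_bytes(2_000_000_000)  # two billion particles
print(size / 1e9, "GB")               # 96.0 GB
```

Under these assumptions two billion particles occupy roughly 96 GB per snapshot, consistent with the figure of about 100 Gbytes quoted above.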

From Insight to Optimization

The increased capabilities offered by high-performance computing enable us to think beyond simulating flows to actually controlling and optimizing them. In our latest collaboration with IBM Zurich and CSCS, we employ evolutionary algorithms in order to discover vortex wake configurations that lead to fast instability growth and wake destruction. Evolutionary algorithms are well suited to parallel computer architectures, but at the same time require a large number of iterations, an issue that makes them seemingly unsuitable for expensive CFD calculations (contemplate thousands of simulations involving billions of computational elements!). We have addressed this problem by exploiting the inherent parallelism of these methods and employing machine-learning techniques to accelerate their convergence. We are currently discovering vortex configurations that can assist engineering intuition in designing next-generation aircraft.
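The inherent parallelism mentioned above comes from the fact that all candidates in one generation can be evaluated independently. The following is a minimal sketch of a plain (mu, lambda) evolution strategy with a fixed step-size decay; it is not the algorithm used in this work, which the article does not specify. The objective `sphere` is a cheap stand-in for what would in practice be a full flow simulation, and all names and parameter values are hypothetical.

```python
import random


def sphere(x):
    """Stand-in objective (to be minimized); a real run would launch a simulation."""
    return sum(xi * xi for xi in x)


def evolve(objective, x0, sigma=0.5, mu=5, lam=20, generations=60, seed=0):
    """Plain (mu, lambda) evolution strategy with a geometric step-size decay."""
    rng = random.Random(seed)
    parents = [list(x0) for _ in range(mu)]
    best_x, best_f = list(x0), objective(x0)
    for _ in range(generations):
        # Create lam offspring by Gaussian mutation of randomly chosen parents.
        offspring = []
        for _ in range(lam):
            parent = rng.choice(parents)
            offspring.append([xi + sigma * rng.gauss(0.0, 1.0) for xi in parent])
        # The evaluations are independent of each other, so this map is the
        # step that could be distributed over many processors.
        fitness = list(map(objective, offspring))
        ranked = sorted(zip(fitness, offspring), key=lambda pair: pair[0])
        parents = [x for _, x in ranked[:mu]]  # keep the mu best offspring
        if ranked[0][0] < best_f:
            best_f, best_x = ranked[0]
        sigma *= 0.95                          # shrink the mutation step
    return best_x, best_f


best_x, best_f = evolve(sphere, [3.0, -2.0])
```

With 20 offspring per generation and 60 generations this sketch already needs 1,200 objective evaluations, which illustrates why reducing the number of generations matters when each evaluation is a large simulation.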

Next Steps

Our particle framework is being extended to problems ranging from geomechanics to cancer treatments and nanofluidics. We believe in the integration of computer science expertise with mathematics and application domains, and in collaborative platforms that will become essential for effective high-performance computing. We must be reminded that "ta panta rei" (everything flows/changes) holds even for supercomputing, but we believe that revisiting the sciences through the HPC lens will provide us with unexpected treasures.

Links:

http://www.cse-lab.ethz.ch

[1] Multiscale Flow Simulations Using Particle Methods:

http://arjournals.annualreviews.org/doi/abs/10.1146/annurev.fluid.37.061903.175753

[2] Billion Vortex Particle Direct Numerical Simulations of Aircraft Wakes:

http://dx.doi.org/10.1016/j.cma.2007.11.016

Please contact:

Petros Koumoutsakos

Chair of Computational Science, ETH Zurich, Switzerland

Tel: +41 44 632 52 58

E-mail: petros@ethz.ch