The Austrian Institute of Technology has developed a stereo vision system that monitors and counts pedestrians and cyclists in real time. This vision system includes a processing unit with embedded intelligent software for scene analysis, object classification and counting.

Europe has one of the highest population densities in the world, mostly concentrated in urban areas. Human activities in the countries of the European Union produce about 14.7% of the world’s CO2 emissions, making the European Union the third-highest emitter after the USA and China. Road traffic is one of the principal contributors to CO2 emissions, mainly due to an aging car fleet on Europe’s roads, growing congestion, increasingly dense traffic, a lack of traffic management, slow infrastructure improvement and a rise in vehicle mileage. Unlike pollutants such as NOx, PMx, SO2 and CO, CO2 is not toxic to the population and is therefore not regulated by the EC at the moment. However, CO2 emissions contribute to climate change.

Current investigations into cities of the future aim, among other things, to develop concepts for reducing the number of cars on urban roads and for implementing widely available, energy-efficient public transportation. These investigations also deal with city designs that combine living, working, shopping and entertainment, and that are more pedestrian- and cyclist-friendly.

In other words, mobility in the cities of the future will concentrate on walking, cycling and using available public transportation. It is therefore very important to act early in establishing new technologies, tools and approaches for monitoring and managing future urban traffic, in order to maximize the safety and security of citizens.

Within the framework of a national research activity, AIT has developed an event-based stereo vision system for real-time outdoor monitoring and counting of pedestrians and cyclists. This vision system comprises a pair of dynamic vision detector chips, auxiliary electronics and a standalone processing unit embedding intelligent software for scene analysis, object classification and counting.

The detector chip consists of an array of 128×128 pixels built in a standard 0.35 μm CMOS technology. The detector aims to mimic biological vision: its array is made up of autonomous, self-spiking pixels that react to relative changes in light intensity by asynchronously generating events. Its advantages include high temporal resolution, an extremely wide dynamic range and complete redundancy suppression thanks to on-chip preprocessing. It exploits very efficient asynchronous, event-driven information encoding for capturing scene dynamics (e.g. moving objects).
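The event-generation principle described above can be illustrated with a minimal sketch. It is not AIT's pixel circuit: the function name `pixel_events`, the sampled-input model and the contrast threshold of 0.15 are assumptions chosen for illustration only. The sketch models a single pixel that emits an ON or OFF event each time the logarithm of the light intensity has moved by a fixed step since the last event, so that it responds to relative rather than absolute changes.

```python
import math
from typing import List, Tuple

# (timestamp in seconds, polarity): +1 = brighter (ON), -1 = darker (OFF)
Event = Tuple[float, int]


def pixel_events(samples: List[Tuple[float, float]],
                 threshold: float = 0.15) -> List[Event]:
    """Simulate one change-detecting pixel (illustrative model only).

    samples   -- (time, intensity) pairs with intensity > 0
    threshold -- assumed step in log-intensity that triggers an event
    """
    events: List[Event] = []
    # Reference level: log-intensity at the time of the last event.
    ref = math.log(samples[0][1])
    for t, intensity in samples[1:]:
        delta = math.log(intensity) - ref
        # A large change produces several events in a row.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            ref += polarity * threshold
            delta = math.log(intensity) - ref
    return events


if __name__ == "__main__":
    # A static scene produces no events; a doubling of intensity does.
    print(pixel_events([(0.000, 100.0), (0.001, 100.0), (0.002, 200.0)]))
```

In this model a static scene produces no output at all, which is the sense in which redundancy is suppressed, and a doubling of intensity produces the same number of events in dim and in bright light.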

The processing unit embeds algorithms that calculate depth information for the generated events by stereo matching. It also embeds a spatiotemporal processing method for the robust analysis of visual data encompassing dynamic processes such as motion, variable shape and appearance, enabling real-time object (pedestrian or cyclist) classification and counting. The whole system has been evaluated in real (outdoor) surveillance scenarios as a compact, remote, standalone vision system for pedestrians and cyclists.
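As a rough illustration of how depth can be obtained from matched events, the sketch below pairs an event from the left sensor with the closest-in-time event on the same row of the right sensor and converts the resulting disparity to depth with the standard rectified-stereo relation Z = f·B/d. This is not the matching algorithm used in the AIT system: the function names, the time window, the maximum disparity, and the focal length and baseline in the example are all hypothetical, and the sensors are assumed to be rectified.

```python
from typing import List, Optional, Tuple

# (timestamp in seconds, x pixel, y pixel)
Event = Tuple[float, int, int]


def match_event(left: Event, right_events: List[Event],
                max_dt: float = 0.001,
                max_disparity: int = 40) -> Optional[int]:
    """Return the disparity (in pixels) of the best match, or None.

    A candidate must lie on the same row, within max_disparity pixels
    to the left of the left-sensor position, and within max_dt seconds.
    Among candidates, the one closest in time wins.
    """
    t, x, y = left
    best: Optional[int] = None
    best_dt = max_dt
    for tr, xr, yr in right_events:
        disparity = x - xr
        dt = abs(t - tr)
        if yr == y and 0 < disparity <= max_disparity and dt <= best_dt:
            best, best_dt = disparity, dt
    return best


def depth_from_disparity(disparity_px: float, focal_px: float,
                         baseline_m: float) -> float:
    """Depth in metres for rectified stereo: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    left = (0.0100, 70, 64)
    right = [(0.0102, 60, 64), (0.0100, 50, 63), (0.0300, 65, 64)]
    d = match_event(left, right)
    if d is not None:
        # Hypothetical optics: 200 px focal length, 0.25 m baseline.
        print(d, depth_from_disparity(d, focal_px=200.0, baseline_m=0.25))
```

Matching on timestamps is possible here because events carry their own precise time of occurrence; a frame-based stereo pair would instead have to compare image patches.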

Figure 1: Image of the event-based stereo vision system.

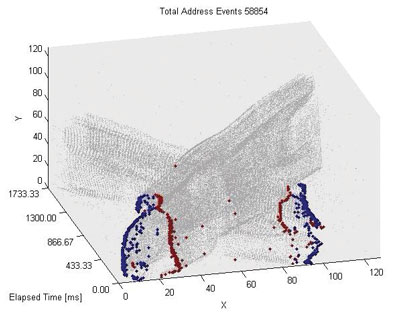

Figure 2: Raw data from the event-based detector spatiotemporally represented.

Figure 1 depicts the event-based stereo vision system, including the pair of detector chips, the optics, the auxiliary electronics and the processor core module mounted on the back of the board (not visible in the picture). Figure 2 shows the events generated by one detector chip as two persons cross the sensor's field of view over a period of 1.73 seconds. The asynchronous nature of these events allows an ideal spatiotemporal representation and processing of the data. Figure 3 shows the processing steps and results for counting pedestrians and cyclists. An image of the scene taken with a standard camera is shown on the left. The corresponding data from one event-based sensor is rendered in an image-like representation (second from left). The middle images show the results of the detection and of the stereo matching. The right image depicts the tracking results, which allow the pedestrians (brown track) and cyclists (red track) to be counted.
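One simple way to obtain an image-like representation from an event stream is to accumulate all events that fall within a time window into a 2D array. The sketch below assumes this approach; the source does not say how the rendering is actually done, and the function name `render_events` and the event tuple layout are hypothetical. Only the 128×128 array size is taken from the description of the detector.

```python
from typing import List, Tuple

# (timestamp in seconds, x pixel, y pixel, polarity +1 or -1)
Event = Tuple[float, int, int, int]


def render_events(events: List[Event], t_start: float, t_end: float,
                  width: int = 128, height: int = 128) -> List[List[int]]:
    """Accumulate events with t_start <= t < t_end into a 2D frame.

    Each cell holds the signed sum of event polarities at that pixel,
    so pixels without activity stay at zero.
    """
    frame = [[0] * width for _ in range(height)]
    for t, x, y, polarity in events:
        in_window = t_start <= t < t_end
        in_bounds = 0 <= x < width and 0 <= y < height
        if in_window and in_bounds:
            frame[y][x] += polarity
    return frame


if __name__ == "__main__":
    stream = [(0.001, 10, 5, 1), (0.002, 10, 5, 1),
              (0.003, 11, 5, -1), (0.050, 12, 5, 1)]
    frame = render_events(stream, t_start=0.0, t_end=0.010)
    print(frame[5][10], frame[5][11], frame[5][12])
```

The window length trades temporal detail against how complete a moving object appears in the rendered frame.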

Figure 3: Illustration of the process for detecting and counting pedestrians and cyclists.

Link: http://www.ait.ac.at

Please contact:

Ahmed Nabil Belbachir, Norbert Brändle, Stephan Schraml, Erwin Schoitsch

AIT Austrian Institute of Technology / AARIT, Austria

E-mail: