by Adrian Stanciulescu, Jean Vanderdonckt, Benoit Macq

Multimodal Web applications provide end-users with a flexible user interface (UI) that allows graphical, vocal and tactile interaction. As experience in developing such multimodal applications grows, the need arises to identify and define their major design options in order to pave the way for designers towards a structured development life cycle.

At the Belgian Laboratory of Computer-Human Interaction (BCHI), Université catholique de Louvain, we argue that developing consistent and usable multimodal UIs (involving a combination of graphics, audio and haptics) would benefit from a methodology that is typically composed of: (1) a set of models gathered in an ontology, (2) a method which manipulates the involved models, and (3) tools that implement the defined method.

The Models

Our methodology considers a model-based approach where the specification of the UI is shared between a set of implementation-independent models, each model representing a facet of the interface characteristics. In order to encourage user-centred design, we take the task and domain models into consideration right from the initial design stage. The approach involves three steps towards a final UI: deriving the abstract UI from the task and domain models, deriving the concrete UI from the abstract one, and producing the code of the corresponding final UI. All the information pertaining to the models describing the future UI is specified in the same UI Description Language: UsiXML.
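The three derivation steps can be illustrated with a minimal sketch. It is not UsiXML itself: the class names, the `WIDGETS` mapping and the string output are hypothetical stand-ins chosen for illustration, and a real final UI would be markup or code rather than descriptive strings.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str   # e.g. "select action"
    kind: str   # hypothetical task category: "input" or "output"


@dataclass
class AbstractUnit:
    task: str
    facet: str  # modality-independent role of the unit


@dataclass
class ConcreteElement:
    task: str
    modality: str
    widget: str


# Hypothetical mapping from (facet, modality) to a concrete element type.
WIDGETS = {
    ("input", "graphical"): "text field",
    ("input", "vocal"): "speech prompt with grammar",
    ("output", "graphical"): "label",
    ("output", "vocal"): "synthesised utterance",
}


def derive_abstract(tasks):
    """Step 1: task model -> abstract UI (no modality chosen yet)."""
    return [AbstractUnit(task=t.name, facet=t.kind) for t in tasks]


def derive_concrete(units, modalities):
    """Step 2: abstract UI -> concrete UI (one element per modality)."""
    return [
        ConcreteElement(u.task, m, WIDGETS[(u.facet, m)])
        for u in units
        for m in modalities
    ]


def generate_final(elements):
    """Step 3: concrete UI -> final UI, reduced here to descriptive strings."""
    return [f"{e.modality}: {e.widget} for '{e.task}'" for e in elements]


tasks = [Task("select action", "input")]
final_ui = generate_final(
    derive_concrete(derive_abstract(tasks), ["graphical", "vocal"])
)
```

Keeping each step as a separate function mirrors the idea that every intermediate model remains inspectable before the next derivation is applied.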

The Method

Our method covers all stages of the software development life cycle according to design principles, from early requirements through to prototyping and coding. It relies on a design space that explicitly guides the designer in choosing values of design options that are appropriate for multimodal UIs, depending on parameters. To support these aspects, our approach is based on a catalogue of transformation rules implemented as graphs.

The Tool

We developed MultiXML, an assembly of five software modules, to explicitly support the design space. In order to reconcile computer support and human control, we adopt a semi-automatic approach in which certain repetitive tasks are executed automatically, in part or in full, while the designer retains some level of control. This involves:

- manual selection of design option values by the designer, with the possibility of modifying this configuration at any time

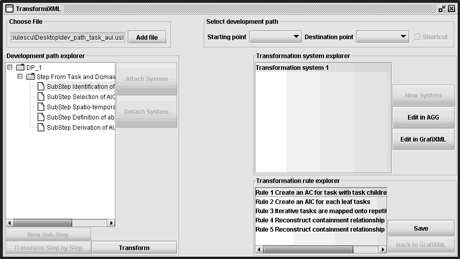

- automatic picking of transformation rules from the transformation catalogue corresponding to the selected options, followed by their application by TransformiXML module (see Figure 1) to reduce the design effort.
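The two steps above can be sketched as follows. This is a simplified illustration under stated assumptions: the actual rules are implemented as graph transformations, whereas here each rule is a plain function over a dictionary. The option names (`prompt`, `input`), the `Rule` class and the `CATALOGUE` contents are hypothetical and do not describe the MultiXML or TransformiXML interfaces.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    option: str                      # design option the rule belongs to
    value: str                       # option value that triggers the rule
    apply: Callable[[dict], dict]    # transformation applied to the model


def with_modality(slot, modality):
    """Build a transformation that adds a modality to one slot of the model."""
    def apply(model):
        updated = dict(model)  # leave the input model untouched
        updated[slot] = sorted(set(model.get(slot, [])) | {modality})
        return updated
    return apply


# Hypothetical catalogue: one rule per (design option, value) pair.
CATALOGUE = [
    Rule("prompt", "vocal", with_modality("prompt", "vocal")),
    Rule("prompt", "graphical", with_modality("prompt", "graphical")),
    Rule("input", "vocal", with_modality("input", "vocal")),
    Rule("input", "graphical", with_modality("input", "graphical")),
    Rule("input", "tactile", with_modality("input", "tactile")),
]


def pick_rules(catalogue, options):
    """Automatic step: keep the rules matching the designer's option values."""
    return [r for r in catalogue if r.value in options.get(r.option, ())]


def transform(model, rules):
    """Apply the selected rules in catalogue order."""
    for rule in rules:
        model = rule.apply(model)
    return model


# Manual step: the designer assigns values to the design options.
options = {"prompt": {"vocal"}, "input": {"graphical", "vocal"}}
ui = transform({"task": "select action"}, pick_rules(CATALOGUE, options))

# The configuration can be changed at any time and the rules re-applied.
options["input"] = {"tactile"}
ui_revised = transform({"task": "select action"}, pick_rules(CATALOGUE, options))
```

Because rule selection is recomputed from the option values, changing the configuration only requires re-running the transformation on the source model.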

Case Studies

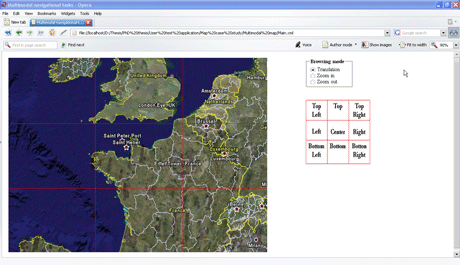

The described methodology was tested on a couple of case studies using the Opera browser with the embedded IBM Multimodal Runtime Environment. One of these case studies is a large-scale image application (see Figure 2) that enables users to browse a 3x3 grid map (ie translate, zoom in or zoom out) using an instruction pattern composed of three elements: Action, Parameter Y and Parameter X. The X and Y parameters identify the coordinates of the grid unit to which the action is applied (eg Action = "translate", Parameter Y = "top", Parameter X = "left"). Users can specify each element of the pattern with any one of the graphical, vocal and tactile modalities, or with a combination of them.
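The instruction pattern lends itself to a small sketch of how inputs from different modalities could be combined into one complete instruction. Only "translate", "zoom in", "zoom out", "top" and "left" appear in the description above; the remaining row and column names, the event format and the `fuse` function are assumptions made for illustration, not the application's actual implementation.

```python
# Accepted values per pattern element. "middle", "bottom", "centre" and
# "right" are assumed names for the remaining cells of the 3x3 grid.
VOCABULARY = {
    "action": ("translate", "zoom in", "zoom out"),
    "y": ("top", "middle", "bottom"),
    "x": ("left", "centre", "right"),
}
MODALITIES = ("graphical", "vocal", "tactile")


def fuse(events):
    """Combine (modality, element, value) events into one instruction.

    Returns (action, row_index, column_index). A later event for the same
    element overrides an earlier one, whatever its modality.
    """
    slots = {}
    for modality, element, value in events:
        if modality not in MODALITIES:
            raise ValueError(f"unknown modality: {modality}")
        if value not in VOCABULARY.get(element, ()):
            raise ValueError(f"invalid value {value!r} for {element!r}")
        slots[element] = value
    missing = [e for e in VOCABULARY if e not in slots]
    if missing:
        raise ValueError(f"incomplete instruction, missing: {missing}")
    row = VOCABULARY["y"].index(slots["y"])
    column = VOCABULARY["x"].index(slots["x"])
    return slots["action"], row, column


# Each element is supplied through a different modality.
instruction = fuse([
    ("vocal", "action", "translate"),
    ("graphical", "y", "top"),
    ("tactile", "x", "left"),
])
```

Treating the modality as metadata on each event, rather than as part of the instruction, is what lets any element be given through any modality.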

Motivations

One of the main advantages of our design space is its independence from any existing method or tool, making it useful for any developer of multimodal UIs. The design options clarify the development process by presenting the choices in a structured way. The method strives for consistent results when similar values are assigned to design options in similar circumstances, which also means that less design effort is required. Every piece of development is reflected in a concept or notion, such as a design option, that represents some abstraction with respect to the code level. Conversely, each design option is defined clearly enough to drive the implementation without requiring any further interpretation effort. Moreover, the adoption of a design space supports the tractability of more complex design problems or of a class of related problems.

The Project

The current work was conducted in the context of the Special Interest Group on Context-Aware Adaptation of the SIMILAR project. The group began in December 2003 and was coordinated by BCHI. Five other partners were involved: ICI, ISTI-CNR, University Joseph Fourier, University of Castilla-La Mancha and University of Odense. The goal of the group was to define, explore, implement and assess the use and the switching of modalities to produce interactive applications that are aware of the context of use in which they are executed. These applications can then be adapted according to this context so that their UIs remain as usable as possible. In the future, BCHI will continue working on the design, implementation and evaluation of Ambient Intelligence systems as a member of the ERCIM Working Group Smart Environments and Systems for Ambient Intelligence (SESAMI).

Links:

http://www.isys.ucl.ac.be/bchi

http://www.usixml.org

http://www.similar.cc

http://www.ics.forth.gr/sesami/index.html

Please contact:

Adrian Stanciulescu, Jean Vanderdonckt and Benoit Macq

Université catholique de Louvain, Belgium

Tel: +32 10 47 83 49, +32 10 47 85 25, +32 10 47 22 71

E-mail: stanciulescu@isys.ucl.ac.be, vanderdonckt@isys.ucl.ac.be, benoit.macq@uclouvain.be