by Barnabás Takács

By merging the power of animation, virtual reality, artificial intelligence and perception to create a novel educational content delivery platform, researchers at the Virtual Human Interface Group of SZTAKI are raising the experience of learning to a new level.

Ambient Facial Interfaces (AFIs) provide visual, non-verbal feedback via photo-realistic animated faces. They display facial expressions and body language that are reliably recognizable. These high-fidelity digital faces are controlled by interaction parameters and physical data derived from the state of the user, in our case the student. An AFI system combines these measurements into a single facial expression that is displayed to the user, thereby reacting to the user's behaviour in a closed-loop manner.

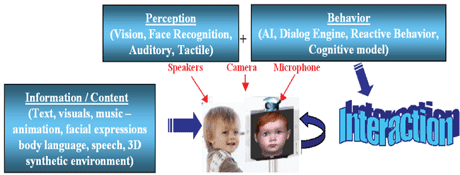

The Virtual Human Interface Group of SZTAKI has created an interactive educational tool called BabyTeach, with the goal of demonstrating a new kind of user interface to support education. At the centre of this application is a virtual baby face that reacts to the students with its elaborate repertoire of facial expressions, mimicry and gestures. These positive or negative expressions help reinforce the educational content. Figure 1 shows a schematic of the structure of our application.

The system runs face recognition algorithms to determine not only if there are users with whom to interact, but also whether it is a single child, a child with an accompanying adult or just a grown-up standing in front of the system. Based on this information our virtual baby uses different strategies to help learning. This form of non-verbal feedback based on visual perception is supplemented by the tactile subsystem in the form of a touch screen.
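The audience distinction described above can be sketched as a small classification step. This is only an illustrative sketch, not the BabyTeach implementation: the `Audience` names, the `classify_audience` function and the assumption that the face recognition stage yields one `"child"` or `"adult"` label per detected face are all hypothetical. The `GROUP_OF_CHILDREN` case is not mentioned in the article and is added only so that every input has a result.

```python
from enum import Enum


class Audience(Enum):
    """Audience categories named in the text, plus one assumed fallback."""
    NOBODY = "nobody"
    SINGLE_CHILD = "single child"
    CHILD_WITH_ADULT = "child with adult"
    ADULT_ONLY = "adult only"
    GROUP_OF_CHILDREN = "group of children"  # assumption, not from the article


def classify_audience(faces):
    """Map per-face labels ("child" or "adult") to an Audience category.

    The input format is hypothetical; a real face recognition stage
    would produce richer data than plain string labels.
    """
    children = faces.count("child")
    adults = faces.count("adult")
    if children == 0 and adults == 0:
        return Audience.NOBODY
    if children == 0:
        return Audience.ADULT_ONLY
    if adults > 0:
        return Audience.CHILD_WITH_ADULT
    if children == 1:
        return Audience.SINGLE_CHILD
    return Audience.GROUP_OF_CHILDREN
```

The resulting category could then be used to select a teaching strategy; the article does not describe the strategies themselves, so none are sketched here.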

Emotional Modulation is a technique that helps transform information into knowledge using emotions as the primary catalyst. The Artificial Emotion Space (AES) algorithm in the BabyTeach system embodies a simple everyday observation: when we are in a good mood, we are generally more receptive to information presented in a positive fashion, while when we are sad or down, we prefer things presented in a more subdued manner. This simple notion of empathy presupposes i) recognition of the student's state of mind, and ii) a mechanism to replicate it in the animation domain. As an example, the digital child may exhibit layers of its own emotions that coincide with, react to, or alternatively oppose the student's mood. Via this coupling mechanism the system is capable of provoking emotions in relation to the presented learning material. By associating positive and negative feedback with that material, this emotional modulation algorithm serves as a powerful method to improve a student's ability to absorb information in general, and educational materials in particular.
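The idea of a baby emotion that coincides with or opposes the student's mood can be illustrated with a very small numeric model. The sketch below is not the AES algorithm, whose details the article does not give. It assumes, purely for illustration, that mood is reduced to a single valence value between -1 (sad) and +1 (happy); the function name `baby_valence` and the parameters `coupling` and `own_mood` are hypothetical.

```python
def baby_valence(student_valence, coupling=1.0, own_mood=0.0):
    """Return the valence the virtual baby displays, clamped to [-1, 1].

    student_valence: estimated student mood, -1 (sad) to +1 (happy).
    coupling: +1 mirrors the student's mood, -1 opposes it, 0 ignores it.
    own_mood: the baby's own baseline emotion layer.
    All three quantities are illustrative assumptions.
    """
    raw = own_mood + coupling * student_valence
    return max(-1.0, min(1.0, raw))


# A cheerful student with a mirroring baby, then with an opposing baby.
print(baby_valence(0.5))                 # mirrors the student
print(baby_valence(0.5, coupling=-1.0))  # opposes the student
```

A single coupling coefficient is the simplest way to express "coincide" and "oppose" in one formula; a "react to" behaviour would need additional state and is left out of this sketch.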

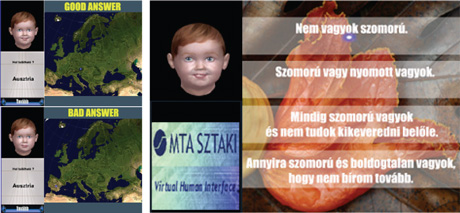

The BabyTeach program package builds upon the emotional modulation and non-verbal feedback techniques described above to teach geography and help students of all ages to take tests. The system operates by presenting a series of questions and asking the user to indicate the correct answer by moving a pointer using the mouse. The animated child's face appears in the upper left-hand corner and shows encouraging or disappointed expressions according to the answers. As an example, when the mouse moves toward the proper answer, the face gently leans forward or nods as a form of acknowledgment. Once a selection is made, immediate feedback regarding the choice is displayed as a smile or tweaking mouth.

Figure 2 demonstrates this process with a test map of Europe. During learning, students may make many attempts while the baby's face gently guides them towards the correct answer. Apart from the positive effects of emotional modulation on learning, this application also involves the motor system, thereby further helping students to memorize spatial locations. There are multiple levels of difficulty in which the system offers a greater or lesser degree of context and more or fewer details. The figure shows an intermediate level of difficulty, where the borders of countries are shown. Easier tests would contain cities and other landmarks, while the most difficult task involves only the map with no borders drawn. During training, the BabyTeach system also offers cues after a preset number of incorrect answers. In particular, the correct answer is shown for the student to study, and the same question is asked again later. Thanks to the facial feedback, the student may explore the map by continuously holding the mouse button down. The facial feedback of the virtual child will indicate how close he or she is to the country of choice. Of course, when in test mode, no facial feedback is available. However, this mechanism proved to be very successful in helping children to learn geography in a fast and pleasurable manner.
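The proximity-driven feedback and the cue after repeated mistakes can be sketched as follows. This is a minimal illustration under stated assumptions, not the BabyTeach code: the function names, the linear mapping from distance to a closeness score, the `max_distance` parameter and the default of three wrong attempts are all hypothetical.

```python
import math


def facial_feedback(pointer, target, max_distance, test_mode=False):
    """Return a closeness score in [0, 1], or None in test mode.

    pointer, target: (x, y) screen positions of the mouse and of the
    correct answer. A score of 1.0 means the pointer is on the target;
    0.0 means it is max_distance or more away. The linear mapping is
    an assumption made for this sketch.
    """
    if test_mode:
        return None  # no facial feedback is available in test mode
    distance = math.dist(pointer, target)
    return 1.0 - min(distance, max_distance) / max_distance


def should_show_hint(wrong_attempts, hint_after=3):
    """Decide whether to reveal the correct answer during training.

    hint_after stands in for the preset number of incorrect answers;
    the value 3 is an arbitrary placeholder.
    """
    return wrong_attempts >= hint_after
```

An animation layer could map the score to expression intensity, for example a stronger nod or lean as the score approaches 1.0.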

Interaction can occur by touching on-screen visual elements in response to learning exercises, but students may also reach for the virtual baby's face, which reacts accordingly when it notices that it is being touched. To have some fun while learning, the BabyTeach application also lets the students apply finger-paint to the face by changing the colour and properties of the underlying skin. This is shown in Figure 3.

The BabyTeach system also incorporates an advanced multi-user streaming architecture that allows interactive content to be delivered to mobile phones and Internet-based viewers. This feature further opens up the possibility of helping learners of all ages to realize their dreams in schools, colleges and universities or from the comfort of their homes.

Our results indicate that the BabyTeach approach provides a novel platform for presenting educational content to learners of all ages. This experimentally evaluated human-centered user interface employs high-fidelity, animated digital faces and advances the state of the art in educational technology. Our solution is based on the mechanisms of closed-loop dialogues and advanced perceptive capabilities with artificial intelligence. We have developed novel algorithms for emotional modulation, a technique based on the cognitive dynamics of learning and interaction. This helps to increase the efficiency of the learning process while creating positive reinforcement and an interactive experience. The final outcome is the student's increased ability to absorb new information.

Link:

http://www.vhi.sztaki.hu

Please contact:

Barnabás Takács

SZTAKI, Hungary

Tel: +36 1 279 6000

E-mail: BTakacs@sztaki.hu
