by Bernt Meerbeek, Jettie Hoonhout, Peter Bingley and Albert van Breemen

Can a robot cat be a buddy for children and adults? Philips Research in the Netherlands developed iCat, a prototype of an emotionally intelligent user-interface robot. It can serve as a game buddy for children, as a TV assistant, or in many other roles.

The AmI Paradigm

Developed in the late 1990s, the Ambient Intelligence (AmI) paradigm presents a vision for digital systems from 2010 onwards. Current technological developments enable electronics to be integrated into the environment, allowing people to interact with their surroundings in a seamless, trustworthy and easy-to-use manner. From a hardware point of view, this makes embedding through miniaturisation the main system design objective. In software, we study context awareness, ubiquitous access and natural interaction. The intended user benefit is a better quality of life, achieved by creating a desired atmosphere and providing appropriate functionality through intelligent, personalised and interconnected systems and services.

The iCat Concept

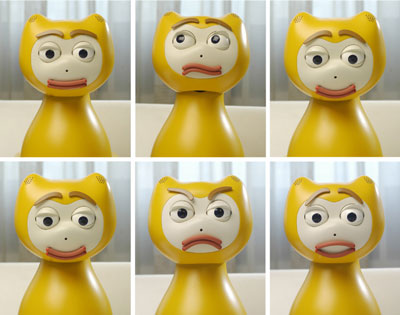

Emotional robots are generally considered a new and promising development for intuitive interaction between users and AmI environments. iCat was developed as an open interactive robot platform with emotional feedback, in order to investigate the social aspects of interaction between users and domestic robots that display facial expressions; see Figure 1.

iCat is a 38 cm tall, cat-like robot character. Its head contains 13 servomotors that move the head and different parts of the face, including the eyebrows, eyes, eyelids and mouth. With these servomotors, iCat can generate facial expressions, which give the robot socially intelligent features; see Figure 2. Through a camera in its nose, iCat can recognise objects and faces using computer vision techniques. Each foot contains a microphone, which is used to identify sounds, recognise speech and determine the direction of the sound source. A speaker in its base can play sounds (WAV and MIDI files) and connected speech. iCat can be connected to a home network to control domestic appliances, and to the Internet to obtain information. It senses touch through sensors in its feet, and it can communicate information encoded as coloured light through multi-colour LEDs in its feet and ears. For instance, the LEDs in the ears can indicate different modes of operation, such as sleeping, awake, busy and listening.

Psychology meets Technology

A key research question for iCat is whether facial expressions and a range of behaviours can give the robot a personality, and which type of personality would be most appropriate when interacting with users in particular applications. Research with iCat has focused on evaluating application concepts, for example iCat as a game buddy for children or as a TV assistant. The research questions in these studies were: "What personality do users prefer?" and "What level of control do they prefer?"

The results of the user study with iCat as a game buddy indicated that children preferred playing games with iCat to playing the same games on a computer. They were able to recognise differences in personality between differently programmed versions of iCat. Overall, the more extrovert and sociable iCat was preferred to the more neutral one.

Young and middle-aged adults were also able to recognise differences in personality in iCat as a TV assistant. In this study, two personalities were combined with two levels of control. In the high-control condition, iCat used a speech-based command-and-control interaction style, whereas in the low-control condition it used a speech-based, system-initiated natural language dialogue style. The preferred combination was an extrovert and friendly personality with low user control.

One of the most interesting results was that the personality of the robot influenced the level of control that people perceived. This is very relevant in the context of intelligent systems that work autonomously to take tedious tasks out of the hands of humans. It suggests that the robot's personality can be used as a means to increase the amount of control that users perceive.

Conclusion

The studies with iCat have shown that mechanically rendered emotions and behaviours can have a significant effect on the way users perceive and interact with robots. Moreover, for a range of applications, users prefer interacting with a socially intelligent robot to more conventional means of interaction. Further studies are planned. One of the questions they will address is how iCat should behave to inspire trust and compliance in users, which is important if the robot cat is to act as a personal (health) trainer, for example.

Links:

http://www.hitech-projects.com/icat

Please contact:

Bernt Meerbeek, Philips Research Europe, The Netherlands

Tel: +31 40 27 47535

E-mail: bernt.meerbeek@philips.com

Albert van Breemen, Philips Research Europe, The Netherlands

Tel: +31 40 27 47864

E-mail: albert.van.breemen@philips.com