by Martin Leucker

Ultimately, a safety-critical software system should meet its safety requirements if it is continuously monitored and is corrected whenever a safety violation is detected. We present Runtime Verification and Runtime Reflection as promising techniques that, respectively, monitor and steer safety-critical systems so that they always meet their safety requirements.

Program analysis techniques that identify possible flaws in a program, and automatic verification techniques such as model checking, have been successful. Nevertheless, there is always the risk that a program verified a priori behaves slightly differently, and faultily, at runtime. This may simply be the result of compiler bugs, or it may be due to mismatches between the expected and actual behaviour of the execution environment, for instance with respect to timing or memory behaviour. Moreover, the emerging scheme of 'software as services', in which applications are established by composing software services provided by different parties at runtime, and likewise highly adaptive systems, make the analysis of such systems prior to execution next to impossible.

Runtime verification is a lightweight verification technique that complements traditional techniques such as model checking and testing. It checks whether the current execution of a system under scrutiny satisfies or violates a given correctness property. One of its main distinguishing features is that, as the name suggests, it is performed at runtime. This opens up the possibility not only of detecting incorrect behaviour of a software system but also of reacting whenever misbehaviour is encountered. Reacting to misbehaviour is the concern of runtime reflection.

Checking whether an execution meets a correctness property is typically performed using a monitor. In its simplest form, a monitor decides whether the current execution satisfies a given correctness property by outputting either yes/true or no/false. More detailed assessments, like the probability with which a given correctness property is satisfied, can also be given.
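To make the idea of a yes/no monitor concrete, the following is a minimal sketch in Python. The property ("once `close` has occurred, no `read` may follow"), the event names and the class name are assumptions chosen for illustration; they are not taken from any particular runtime verification tool.

```python
from typing import Iterable


class NoReadAfterCloseMonitor:
    """Monitor for a hypothetical property: after 'close', no 'read' may occur."""

    def __init__(self) -> None:
        self.closed = False
        self.violated = False

    def step(self, event: str) -> bool:
        """Consume one event; return True as long as the property still holds."""
        if event == "close":
            self.closed = True
        elif event == "read" and self.closed:
            # A violation of this property can never be undone by later events.
            self.violated = True
        return not self.violated


def check(trace: Iterable[str]) -> bool:
    """Run a fresh monitor over a finite trace and return its final verdict."""
    monitor = NoReadAfterCloseMonitor()
    verdict = True
    for event in trace:
        verdict = monitor.step(event)
    return verdict
```

Calling `check(["open", "read", "close"])` yields a positive verdict, whereas a trace in which `read` follows `close` yields a negative one. The monitor only observes events; it does not alter the execution.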

In runtime verification, monitors are typically generated automatically from a high-level specification. As runtime verification has its roots in model checking, some variant of linear temporal logic is often employed. In practice, however, one might use more readable languages such as SALT (Structured Assertion Language for Temporal Logic), which can be translated automatically to lower-level logics.
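As an illustration of what such a specification might look like, consider the following linear temporal logic formula. The propositions $\mathit{close}$ and $\mathit{read}$ are hypothetical and chosen only for this example.

$$
\mathbf{G}\,\bigl(\mathit{close} \rightarrow \mathbf{X}\,\mathbf{G}\,\neg\,\mathit{read}\bigr)
$$

Read informally: at every point of the execution ($\mathbf{G}$), if $\mathit{close}$ holds, then from the next step onwards ($\mathbf{X}\,\mathbf{G}$) $\mathit{read}$ never holds. A property of this kind can be refuted by a finite prefix of an execution, which is what makes it suitable for monitoring.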

Besides checking safety properties directly using the monitors generated from them, runtime verification can also be used with partially verified systems. Such partial correctness proofs often depend on assumptions made about the behaviour of the environment. These assumptions can easily be checked at runtime using runtime verification techniques.
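A sketch of such an assumption check is given below. It assumes, purely for illustration, that a proof relied on inputs from the environment arriving at most `max_gap` seconds apart; the function name and the timing bound are hypothetical.

```python
from typing import Iterable, Optional


def max_gap_respected(timestamps: Iterable[float], max_gap: float) -> bool:
    """Check that consecutive inputs are never more than max_gap seconds apart.

    The timing bound stands in for an environment assumption that an
    offline correctness proof might have relied on.
    """
    previous: Optional[float] = None
    for t in timestamps:
        if previous is not None and t - previous > max_gap:
            return False  # the environment broke the assumption
        previous = t
    return True
```

If the check fails, the offline proof no longer covers the current execution, which is exactly the situation a runtime monitor is meant to reveal.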

Runtime verification itself deals only with detecting whether correctness properties are violated (or satisfied). Thus, when a violation is observed, runtime verification typically does not influence or change the program's execution, for example by trying to repair the observed violation.

Runtime reflection (RR) is an architecture pattern for the development of safety-critical and reliable systems. It follows the well-known FDIR scheme, which stands for Failure Detection, Identification and Recovery. The general idea of FDIR is that detecting a fault in a system does not typically identify the failure: for example, there might be different reasons why a monitored client does not follow a certain protocol, one of which is that it uses an old version of the protocol. If this is identified as the failure, a reconfiguration may switch the server to work with the old version of the protocol.

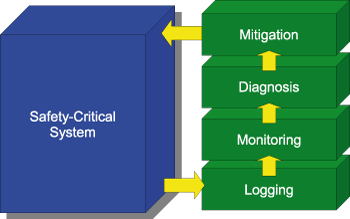

Within runtime reflection, the FDIR scheme is instantiated using runtime verification, diagnosis, and mitigation. The logical architecture of an application following the runtime reflection pattern is shown in Figure 1, in which we see that the monitoring layer is preceded by a logging layer. The role of the logging layer is to observe system events and to provide them in a suitable format for the monitoring layer. The logging layer can be realized either by adding code annotations within the system to be built, or as separate stand-alone loggers that record, for example, network traffic.
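The annotation-based variant of the logging layer could be sketched in Python with a decorator, as below. The event format, the `EVENT_LOG` list and the example function `open_valve` are assumptions made for this illustration only.

```python
import functools
from typing import Any, Callable, List, Tuple

# Hypothetical event format: (kind, function name).
Event = Tuple[str, str]

# Events collected for the monitoring layer; a real system might use a queue.
EVENT_LOG: List[Event] = []


def logged(func: Callable[..., Any]) -> Callable[..., Any]:
    """Annotation that records call, return and error events of a function."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        EVENT_LOG.append(("call", func.__name__))
        try:
            result = func(*args, **kwargs)
        except Exception:
            EVENT_LOG.append(("error", func.__name__))
            raise
        EVENT_LOG.append(("return", func.__name__))
        return result

    return wrapper


@logged
def open_valve() -> str:
    """Example application function whose behaviour is observed."""
    return "valve open"
```

The annotated function behaves as before; the decorator only appends events to the log, from which monitors can read them.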

The monitoring layer takes care of fault detection and consists of a number of monitors that observe the stream of system events provided by the logging layer. Its task is to detect the presence of faults in the system without actually affecting its behaviour. In runtime reflection, it is assumed to be implemented using runtime verification techniques. If a violation of a correctness property is detected in some part of the system, the generated monitors will respond with an alarm signal for subsequent diagnosis.
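The following sketch shows one way a monitoring layer with several monitors might be organised. The two monitor constructors (`never`, `at_most`) and the monitor names in the usage note are hypothetical; in runtime verification proper, such monitors would be generated from specifications rather than written by hand.

```python
from typing import Callable, Dict, Iterable, List

# A monitor maps one event to True (no violation) or False (violation).
Monitor = Callable[[str], bool]


def never(bad_event: str) -> Monitor:
    """Build a monitor for the property 'bad_event never occurs'."""

    def step(event: str) -> bool:
        return event != bad_event

    return step


def at_most(event_name: str, limit: int) -> Monitor:
    """Build a monitor for 'event_name occurs at most `limit` times'."""
    count = 0

    def step(event: str) -> bool:
        nonlocal count
        if event == event_name:
            count += 1
        return count <= limit

    return step


def run_monitoring_layer(
    monitors: Dict[str, Monitor], events: Iterable[str]
) -> List[str]:
    """Feed every event to every monitor; return the names that raised an alarm."""
    alarms: List[str] = []
    for event in events:
        for name, step in monitors.items():
            if not step(event) and name not in alarms:
                alarms.append(name)
    return alarms
```

For instance, with monitors `{"no_overflow": never("overflow"), "retry_budget": at_most("retry", 2)}`, an event stream containing an `overflow` and three `retry` events raises both alarms. The layer only reads events and reports alarms, so it leaves the behaviour of the observed system unchanged.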

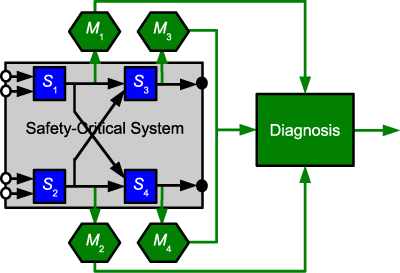

Following FDIR, we separate the detection of faults from the identification of failures. The diagnosis layer collects the verdicts of the distributed monitors and deduces an explanation for the current system state. For this purpose, the diagnosis layer may infer a (minimal) set of system components that must be assumed to be faulty in order to explain the currently observed system state. The procedure is based solely upon the results of the monitors and general information on the system. Thus, the diagnosis layer does not communicate directly with the application. It can easily be implemented in a generic manner based on SAT solving techniques. An example of the logical architecture of a reflective system is shown in Figure 2.
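The inference of a minimal set of faulty components can be sketched as follows. Note that this sketch replaces SAT solving with brute-force enumeration for brevity, and that it rests on two simplifying assumptions: an alarm means that at least one component watched by that monitor is faulty, and a silent monitor means that all components it watches are healthy. The component and monitor names in the usage note are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set


def minimal_diagnoses(
    watched: Dict[str, Set[str]],  # monitor name -> components it observes
    alarms: Set[str],              # monitors that reported a violation
) -> List[FrozenSet[str]]:
    """Return all smallest sets of components that explain every alarm."""
    # Assumption: components seen by a silent monitor are healthy.
    healthy: Set[str] = set()
    for name, components in watched.items():
        if name not in alarms:
            healthy |= components

    suspects = sorted(set().union(*watched.values()) - healthy)
    # Each alarm demands at least one faulty component among its suspects.
    conflicts = [watched[name] - healthy for name in sorted(alarms)]

    # Try candidate sets in order of increasing size; stop at the first hit.
    for size in range(len(suspects) + 1):
        found = [
            frozenset(candidate)
            for candidate in combinations(suspects, size)
            if all(conflict & set(candidate) for conflict in conflicts)
        ]
        if found:
            return found
    return []  # the verdicts cannot be explained under the assumptions
```

With `watched = {"m1": {"sensor", "bus"}, "m2": {"bus", "actuator"}, "m3": {"actuator"}}` and alarms from `m1` and `m2`, the only minimal diagnosis is that `bus` is faulty, since the silent monitor `m3` exonerates `actuator`. The function uses only monitor verdicts and static system information, in line with the separation from the application described above.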

The results of the system's diagnosis are then used to recover the system and, if possible, to mitigate the failure. However, depending on the diagnosis and the particular failure, it may not always be possible to re-establish a particular system behaviour. In some situations, such as the occurrence of fatal errors, a recovery system may only be able to store detailed diagnosis information for offline treatment.
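A mitigation step along these lines might be sketched as below, reusing the protocol-version scenario as an example. The `Server` class, the failure labels and the table of recovery actions are hypothetical and serve only to illustrate the two outcomes: reconfiguration when a recovery action is known, and storing the diagnosis otherwise.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Server:
    """Hypothetical server that can speak one of several protocol versions."""

    protocol_version: int = 2
    offline_reports: List[str] = field(default_factory=list)


def downgrade_protocol(server: Server) -> None:
    """Reconfigure the server to work with the old protocol version."""
    server.protocol_version = 1


# Hypothetical mapping from diagnosed failures to recovery actions.
RECOVERY_ACTIONS: Dict[str, Callable[[Server], None]] = {
    "client_uses_old_protocol": downgrade_protocol,
}


def mitigate(server: Server, diagnosis: str) -> bool:
    """Apply a known recovery action, or store the diagnosis for offline use.

    Returns True if the failure was mitigated, False if it was only recorded.
    """
    action = RECOVERY_ACTIONS.get(diagnosis)
    if action is None:
        server.offline_reports.append(diagnosis)
        return False
    action(server)
    return True
```

A diagnosis of `"client_uses_old_protocol"` switches the server to version 1, while an unknown diagnosis is merely appended to `offline_reports` for later inspection.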

In cooperation with NASA JPL, runtime verification and runtime reflection techniques are currently being enhanced and tailored for application in the embedded systems world, as found for example in the automotive sector.

Links:

http://www.runtime-verification.org

http://runtime.in.tum.de

Please contact:

Martin Leucker

Technical University Munich, Germany

Tel: +49 89 289 17376

E-mail: leucker@informatik.tu-muenchen.de